That Conference 2017, Kalahari Resort, Lake Delton, WI

Intro to Docker – John Ptacek

Day 1, 7 Aug 2017

Disclaimer: This post contains my own thoughts and notes based on attending That Conference 2017 presentations. Some content maps directly to what was originally presented. Other content is paraphrased or represents my own thoughts and opinions and should not be construed as reflecting the opinion of the speakers.

Executive Summary

- Docker containers make deploying a running application easier and more repeatable

- Tooling exists to deploy to Azure or AWS

- Microsoft is definitely pushing Docker as a unit of deployment

Docker

- Not a programming language

- Not a framework

What Docker Is

- Virtualization technology

- Server -> VM -> PaaS -> Containers

- Tool for software

- Installation, removal, upgrading

- Distribution

- Trusting

- Managing

Docker

- Automates setup/configuration of development environments

- E.g. far quicker to get up and running with a typical server environment

- Happy place for developers and infrastructure

- Avoids typical friction between ops and devs

- Analogy for shipping containers

- Contains everything you need; the container is moved from place to place

- Encapsulation

- Native for Linux

- Linux’s containerization features (namespaces, cgroups) became the starting point for Docker

- Installs for OS X and Windows

- But a bit finicky sometimes

- It’s plug-in software (rather than installed software)

- If it works on your dev machine, it will work anywhere

Why Docker?

- Resource efficiency

- 26:1 performance improvement over a VM

- Speed

- Smaller units, quicker build/deploy

- Leads to talking about microservices

- Agile

- Still virtualization, but you ship containers rather than loose code

- Lightweight

- Just Enough OS – only the bits you need in the OS are turned on

- Consistency

- Portability and reproducibility: a Dockerfile, not clicking through installers

- Not relying on how somebody does a particular install or configuration

- If it works on my machine, then it works elsewhere

- Microsoft says so

- Microsoft is moving a lot of stuff towards Docker

- SPS: How does this strategy fit in with Azure architectures?

- SharePoint story

- Get things set up at home, but then have trouble rolling out SharePoint on customer’s server

- Because they don’t necessarily have the same stuff enabled on their server

- Environmental Drift

- Avoids having to set up a big dev environment to go back and support something from several years ago

- Docker allows the entire environment to be easily reproduced, using a Dockerfile

- Cattle, not pets

- Pets: the care and feeding that happens when you set up a server

- Cattle: you don’t care that much if one of the servers drops out

Things

- Containers are not virtualization as we know it

- Containers can be thought of as shipping containers

- Everything embedded that you need

- Containers are created from images

- Images are bundled snapshots

- Images are deployable

- Can spin up in Azure, AWS, Google, or in-house

- Can also move these between platforms

- Containers don’t tie you to platform

- Compatibility between OSes

- Build on OS X, run on Windows

- What app store did for mobile

- Docker is like a multi-platform app store for developers

- Finicky for OS X and Windows

- Security by isolation from OS

- In principle, you can’t get from a container back to the host operating system

- Helps manage risk

- Images – immutable file system with execution parameters

- Containers – instantiations of images (running or inactive)
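The image/container split above can be sketched as a small TypeScript model. This is a toy under stated assumptions, not how Docker is implemented: `buildImage`, `Container`, and the file paths are hypothetical names, and the per-container writable layer stands in for the thin layer Docker adds on top of an image's read-only layers.

```typescript
// Toy model of images vs. containers; all names are hypothetical, not Docker's API.

type ContainerState = "created" | "running" | "exited";

interface Image {
  readonly id: string;
  readonly files: Readonly<Record<string, string>>; // immutable file-system snapshot
  readonly entrypoint: readonly string[];           // execution parameters
}

// Build an image once; freezing it models immutability.
function buildImage(id: string, files: Record<string, string>, entrypoint: string[]): Image {
  return Object.freeze({
    id,
    files: Object.freeze({ ...files }),
    entrypoint: Object.freeze([...entrypoint]),
  });
}

// A container is one instantiation of an image, running or not.
class Container {
  readonly name: string;
  readonly image: Image;
  state: ContainerState = "created";
  // Per-container writable layer; changes here never touch the shared image.
  private readonly writableLayer = new Map<string, string>();

  constructor(name: string, image: Image) {
    this.name = name;
    this.image = image;
  }

  start(): void {
    this.state = "running";
  }

  stop(): void {
    this.state = "exited";
  }

  writeFile(path: string, contents: string): void {
    this.writableLayer.set(path, contents);
  }

  // Container-local changes shadow the image's files.
  readFile(path: string): string | undefined {
    return this.writableLayer.get(path) ?? this.image.files[path];
  }
}
```

Two containers built from the same image start out identical, but a change made inside one is invisible to the other and to the image itself, which is why the image can be shipped around as a fixed unit.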

Demo – Simple node.js application

- Sends a message to console

Running it

- Can run on the dev box directly, i.e. by running it with Node

Running with Docker

- Dockerfile – contains the instructions for building the image the container runs from

- Pull down the Node base image

- Make a directory for the app, and one for the user

- Run npm install for dependent packages

- Copy the source code into the image

- ENTRYPOINT – specifies the command to run when the container starts

- .dockerignore – lists what not to copy into the image

- e.g. node_modules

- Build the image

- docker build – runs through the Dockerfile and sets everything up

- At the end, it gives you an image ID

- The image now exists on the dev machine

- Now run this image

- Can map the container’s port to a port on the host machine (docker run -p)

- docker run

- Can hit the site at the mapped host port

- Log in to the container

- docker exec – e.g. run a bash shell inside the running container

- docker ps

- Lists running containers
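To make the Dockerfile steps above concrete, here is a hedged sketch that writes them out as text from TypeScript. The base image tag, working directory, port, and `server.js` entry file are assumptions rather than the demo's actual values; the tag is pinned instead of `node:latest`, following the advice in the Q&A. `USER node` relies on the non-root `node` user that the official Node images provide, and the `.dockerignore` entries are illustrative.

```typescript
// Hypothetical helper that renders the Dockerfile steps from the demo as text.
// Values passed in (tag, paths, port, entry file) are illustrative assumptions.

interface DockerfileOptions {
  baseImage: string;    // pin a specific tag rather than node:latest
  appDir: string;       // where the app lives inside the image
  port: number;         // port the app listens on inside the container
  entrypoint: string[]; // command run when the container starts
}

function renderDockerfile(opts: DockerfileOptions): string {
  const lines = [
    `FROM ${opts.baseImage}`,                        // pull down the Node base image
    `WORKDIR ${opts.appDir}`,                        // create and switch to the app directory
    "COPY package*.json ./",                         // copy manifests first so npm install can be cached
    "RUN npm install",                               // install dependent packages
    "COPY . .",                                      // copy source code (minus .dockerignore entries)
    "USER node",                                     // run as the non-root user from the official image
    `EXPOSE ${opts.port}`,                           // document the container port
    `ENTRYPOINT ${JSON.stringify(opts.entrypoint)}`, // exec form, e.g. ["node","server.js"]
  ];
  return lines.join("\n") + "\n";
}

// Keep local dependencies and debug logs out of the build context.
const DOCKERIGNORE = ["node_modules", "npm-debug.log"].join("\n") + "\n";
```

Saved as `Dockerfile` next to the app, it would be built and run with commands along the lines of `docker build -t my-node-app .` and `docker run -p 8080:3000 my-node-app`. Here `-p 8080:3000` maps host port 8080 to container port 3000, and `my-node-app` is a placeholder name.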

Demo 2 – Calculating Pi

- Running locally – spits out a bunch of debug info to console

- docker run – can run in container

- Winston logger

- Log to a directory

- Can log to external location

- Typically, you wouldn’t persist data to the Docker container

- Because the data is lost when the container is removed

- You’d typically persist data elsewhere
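The point about logging to an external location can be sketched by injecting the log destination instead of hard-coding a path inside the container. The talk didn't say how the demo computed pi, so the Leibniz series here is an assumption, and the `Logger` type is a hypothetical stand-in for Winston, not its API.

```typescript
// Sketch: a pi calculation whose log destination is injected.
// The Leibniz series and the Logger type are assumptions, not the demo's code.

type Logger = (message: string) => void;

function estimatePi(terms: number, log: Logger = () => {}): number {
  const reportEvery = Math.max(1, Math.floor(terms / 4)); // a handful of progress lines per run
  let sum = 0;
  for (let k = 0; k < terms; k++) {
    // Leibniz series: pi / 4 = 1 - 1/3 + 1/5 - 1/7 + ...
    sum += (k % 2 === 0 ? 1 : -1) / (2 * k + 1);
    if ((k + 1) % reportEvery === 0) {
      log(`after ${k + 1} terms: pi ~ ${4 * sum}`);
    }
  }
  return 4 * sum;
}

// In-memory sink for testing. Inside a container you would instead point the
// logger at a mounted volume or an external logging service, so the logs
// outlive the container.
function memorySink(): { log: Logger; lines: string[] } {
  const lines: string[] = [];
  return {
    log: (message) => {
      lines.push(message);
    },
    lines,
  };
}
```

With the Docker CLI, a host directory can be mounted into the container with `-v`, e.g. `docker run -v /host/logs:/app/logs ...`, so files a real logger writes under `/app/logs` survive the container being removed; both paths are placeholders.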

Deploy container to production server

- Docker Hub – registry where you publish your images so they can be deployed

- It’s not production yet

- First, test on your local machine

- Publish to Docker Hub – log in first with docker login

- Tag the image that you created/tested (docker tag)

- docker push – push to Docker Hub

- Push to production, e.g. Azure

- Docker Container settings in Azure

- Should work the same on Azure

- Typically takes 10-15 mins to deploy out to Azure

- Can do the same for AWS: deploy the same container to AWS

- “A bit more painful”

- Use AWS command line tools (you have to install them)

- Quirky to get push to work

- Could even envision running the same app on both AWS and Azure

- More flexibility to react to down time
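The tag-then-push step depends on naming the image the way the registry expects. As a sketch: Docker Hub repositories are named `<username>/<repository>`, an optional `:<tag>` follows, and Docker assumes `latest` when the tag is left off. The `imageReference` helper and the example names are hypothetical; only the naming format comes from Docker.

```typescript
// Hypothetical helper: builds the reference you'd pass to `docker tag` / `docker push`.

interface ImageName {
  registry?: string;  // registry host; omitted for Docker Hub
  namespace?: string; // Docker Hub username or organization
  repository: string;
  tag?: string;       // Docker assumes "latest" when omitted
}

function imageReference(name: ImageName): string {
  if (name.repository !== name.repository.toLowerCase()) {
    // Repository names must be lowercase.
    throw new Error(`repository name must be lowercase: ${name.repository}`);
  }
  const parts = [name.registry, name.namespace, name.repository].filter(
    (part): part is string => part !== undefined && part !== "",
  );
  return `${parts.join("/")}:${name.tag ?? "latest"}`;
}
```

With an image built locally, the matching commands would be `docker tag <image-id> jdoe/pi-calc:1.0.0` followed by `docker push jdoe/pi-calc:1.0.0`, where `jdoe/pi-calc` is a placeholder. The same reference is what you'd point Azure or AWS at when deploying.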

Things

- docker pull – pull an image from Docker Hub

- docker run – run an image as a container

- docker images – list images

- docker ps – list running containers (docker ps -a lists stopped ones too)

- docker rm – delete a container

- docker rmi – delete an image
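How the commands above relate to images and containers can be sketched with a toy in-memory engine. This is not the Docker Engine API; `ToyEngine` and its methods are hypothetical. It mirrors three behaviors of the real CLI: `docker run` pulls an image that isn't present locally, `docker rm` refuses to delete a running container unless forced with `-f` (forcing isn't modeled here), and `docker rmi` refuses to delete an image that a container still references.

```typescript
// Toy in-memory engine mirroring what the CLI commands act on.
// Hypothetical names; not the Docker Engine API.

interface ContainerRecord {
  id: string;
  image: string;
  running: boolean;
}

class ToyEngine {
  private readonly imageSet = new Set<string>();
  private readonly containers = new Map<string, ContainerRecord>();
  private nextId = 1;

  // docker pull: fetch an image into the local store
  pull(image: string): void {
    this.imageSet.add(image);
  }

  // docker images: list local images
  images(): string[] {
    return Array.from(this.imageSet);
  }

  // docker run: create and start a container, pulling the image first if needed
  run(image: string): string {
    if (!this.imageSet.has(image)) this.pull(image);
    const id = `c${this.nextId++}`;
    this.containers.set(id, { id, image, running: true });
    return id;
  }

  // docker stop
  stop(id: string): void {
    this.get(id).running = false;
  }

  // docker ps (running only) / docker ps -a (all)
  ps(all = false): string[] {
    return Array.from(this.containers.values())
      .filter((c) => all || c.running)
      .map((c) => c.id);
  }

  // docker rm: a running container must be stopped first
  rm(id: string): void {
    if (this.get(id).running) throw new Error(`container ${id} is running; stop it first`);
    this.containers.delete(id);
  }

  // docker rmi: refuse while any container (running or stopped) uses the image
  rmi(image: string): void {
    const inUse = Array.from(this.containers.values()).some((c) => c.image === image);
    if (inUse) throw new Error(`image ${image} is in use by a container`);
    this.imageSet.delete(image);
  }

  private get(id: string): ContainerRecord {
    const container = this.containers.get(id);
    if (!container) throw new Error(`no such container: ${id}`);
    return container;
  }
}
```

The ordering the toy enforces matches the usual cleanup sequence with the real CLI: stop the container, `docker rm` it, then `docker rmi` the image.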

Questions

- More than just Azure and AWS?

- Yes, e.g. Google, or could build infrastructure in-house

- But simpler to deploy to Azure or AWS

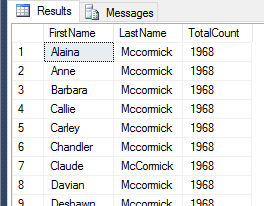

- (Sean): Would you typically run SQL Server inside a Docker container?

- Yes, you can definitely run SQL Server in a Docker container

- Difference between container and image?

- The image is sort of the definition of what needs to run, immutable

- Containers are the pieces that start up

- The container is the running instance

- If I have various configurations for different environments, how does the Dockerfile manage this?

- Use environment variables, set in the Dockerfile (ENV) or passed in with docker run -e

- Why do you use node:latest? Won’t this lead to trouble in the future?

- In production, you’d want to pin a specific version that you know works for you
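The environment-variable answer can be sketched as follows: the same image ships everywhere, and the app reads its settings from the environment at startup. The variable names (`NODE_ENV`, `PORT`, `LOG_LEVEL`) and the defaults are assumptions for illustration; in a real Node app the argument would be `process.env`.

```typescript
// Sketch: one image, many environments, configured via environment variables.
// Variable names and defaults are illustrative assumptions.

type Env = Record<string, string | undefined>;

interface AppConfig {
  environment: "development" | "production";
  port: number;
  logLevel: string;
}

function loadConfig(env: Env): AppConfig {
  const environment = env["NODE_ENV"] === "production" ? "production" : "development";
  const port = Number(env["PORT"] ?? "3000");
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`invalid PORT: ${env["PORT"]}`);
  }
  // Per-environment default, overridable with LOG_LEVEL.
  const logLevel = env["LOG_LEVEL"] ?? (environment === "production" ? "info" : "debug");
  return { environment, port, logLevel };
}
```

At deploy time the values could come from `ENV` lines in the Dockerfile or be passed per environment, e.g. `docker run -e NODE_ENV=production -e PORT=8080 -p 80:8080 jdoe/pi-calc:1.0.0`, where the image name is a placeholder. Validating at startup means a misconfigured container fails fast instead of running with a bad port.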