That Conference 2018, Kalahari Resort, Lake Delton, WI

Know What Your Code Is Doing to SQL Server – Kevin Boles

Day 1, 6 Aug 2018

Disclaimer: This post contains my own thoughts and notes based on attending That Conference 2018 presentations. Some content maps directly to what was originally presented. Other content is paraphrased or represents my own thoughts and opinions and should not be construed as reflecting the opinion of the speakers.

Kevin Boles

TheSQLGuru@gmail.com

Executive Summary

- Handful of specific examples of ways that you can end up with poorly performing SQL Server queries

- Improper use of Entity Framework sometimes to blame

- Always pay attention to exact T-SQL that is being used

Miscellaneous

- Recommend 192GB on server, go beyond max memory (128GB)

- In-Memory OLTP is now available down in Standard Edition

- SQL Server 2016–It Just Runs Faster

- Good blog posts on performance improvements

- Get on 2016 or 2017

Entity Framework is a tool that simplifies development, but at the cost of lower performance

- Can be very productive

- Several traps, easy to fall into

- Other ORMs have similar problem

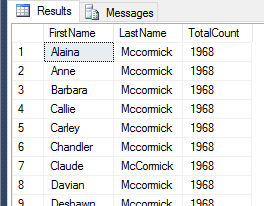

Being too greedy with rows

- EF exposes objects without knowing values

- E.g. pull data out to a list, then do the Where clause on the list

- Pulls entire table before filtering

- Do filtering on SQL Server

- Provided that you have the proper filter

- Or could keep the result as IQueryable, so the filter is still translated to SQL
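To make the difference concrete, here is a minimal sketch of my own (not from the talk). It uses Python with SQLite standing in for EF and SQL Server, and the `pupils` table and its columns are hypothetical. The greedy version mirrors materializing a list before filtering; the second version pushes the filter to the database.

```python
import sqlite3

# Assumption: SQLite stands in for SQL Server; the pupils table is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pupils (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO pupils (name, city) VALUES (?, ?)",
    [(f"pupil{i}", "Madison" if i % 10 == 0 else "Elsewhere") for i in range(1000)],
)

# Greedy: pull the whole table to the client, then filter in application memory.
all_rows = conn.execute("SELECT id, name, city FROM pupils").fetchall()
greedy = [row for row in all_rows if row[2] == "Madison"]

# Better: let the database apply the filter so only matching rows come back.
filtered = conn.execute(
    "SELECT id, name, city FROM pupils WHERE city = ?", ("Madison",)
).fetchall()

print(len(all_rows), "rows transferred by the greedy version")
print(len(filtered), "rows transferred by the filtered version")
```

Both versions end up with the same matching rows; the only difference is how many rows had to leave the database to get there.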

N+1 Select Problem–minimize trips to DB

- ANTS performance profiler

- E.g. one query for the parent entities, then a second query per parent to get its related child entities

- Round-trip for each child query

- Lazy Loading

- “N+1 select problem”

- If you know you want child data ahead of time, do the original query to include it

- Use Eager Loading

- .Where, .Include

- Make sure that you really need them

- Don’t do aggregation in client–do it in SQL Server

- E.g. if we’re just counting child objects, do it with aggregate function in SQL Server

- Don’t iterate and don’t get data that you don’t need
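A rough sketch of the N+1 pattern versus a single aggregated query, again my own illustration in Python with SQLite standing in for SQL Server. The `schools` and `pupils` tables and the `run` helper are hypothetical; `run` simply counts each statement as one round trip.

```python
import sqlite3

# Assumption: SQLite stands in for SQL Server; table names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE schools (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE pupils (id INTEGER PRIMARY KEY, school_id INTEGER, name TEXT);
""")
conn.executemany("INSERT INTO schools (id, name) VALUES (?, ?)",
                 [(i, f"school{i}") for i in range(1, 6)])
conn.executemany("INSERT INTO pupils (school_id, name) VALUES (?, ?)",
                 [(1 + i % 5, f"pupil{i}") for i in range(50)])

stats = {"queries": 0}

def run(sql, params=()):
    """Execute one statement and count it as one round trip to the database."""
    stats["queries"] += 1
    return conn.execute(sql, params).fetchall()

# N+1: one query for the parents, then one more query per parent.
stats["queries"] = 0
lazy = {}
for school_id, name in run("SELECT id, name FROM schools"):
    children = run("SELECT id FROM pupils WHERE school_id = ?", (school_id,))
    lazy[name] = len(children)  # counting on the client
lazy_queries = stats["queries"]

# Single trip: join and aggregate inside the database.
stats["queries"] = 0
eager = dict(run("""
    SELECT s.name, COUNT(p.id)
    FROM schools AS s
    LEFT JOIN pupils AS p ON p.school_id = s.id
    GROUP BY s.id, s.name
"""))
eager_queries = stats["queries"]

print("N+1 version issued", lazy_queries, "queries")
print("aggregated version issued", eager_queries, "queries")
```

The two approaches produce the same per-school counts, but the first one grows by one query for every additional parent row.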

Being too Greedy with Columns

- E.g. pull all Pupils from school, then iterate to dump out certain stuff

- Selecting all columns when you just need subset of columns

- EF doesn’t know what columns you want

- Causes two problems

- More data than we need

- Impossible to index, since we pull everything back

- SELECT ... WITH (NOLOCK)

- If acceptable, can be faster because you don’t wait for concurrent transactions

- If you’re pulling all columns, you’re locking entire table for the length of that query

- Use select new to get just the columns you want
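The indexing point can be sketched as follows. This is my own illustration, with SQLite standing in for SQL Server and a hypothetical `pupils` table and index. It compares the query plan for `SELECT *` against a query that asks only for a column the index already contains.

```python
import sqlite3

# Assumption: SQLite stands in for SQL Server; table and index are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE pupils (
        id INTEGER PRIMARY KEY,
        school_id INTEGER,
        name TEXT,
        address TEXT,
        notes TEXT
    );
    CREATE INDEX ix_pupils_school_name ON pupils (school_id, name);
""")

def plan(sql, params=()):
    """Return the detail text of SQLite's query plan for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)

# All columns: the index cannot satisfy the query by itself,
# so every match needs an extra lookup into the table.
wide_plan = plan("SELECT * FROM pupils WHERE school_id = ?", (1,))

# Only the needed column: the index alone can answer the query.
narrow_plan = plan("SELECT name FROM pupils WHERE school_id = ?", (1,))

print("wide:  ", wide_plan)
print("narrow:", narrow_plan)
```

SQL Server's plan output looks different, but the idea carries over: a narrow column list gives the optimizer the chance to use a covering index, while pulling every column rules that out.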

Mismatched Data Types

- If data types don’t match, even simple queries can perform poorly

- E.g. search for entities with particular zip code

- Dev system should match production system in terms of scale

- Find query runs fast, EF runs very slowly

- Query plan warning

- CONVERT_IMPLICIT

- Function on a column in a WHERE clause

- Column in database is VARCHAR, but .NET has string, which is UTF-16 Unicode

- SQL Server does

- INDEX SCAN of every zip code

- Big I/O cost

- Then converts the data–CPU hit

- Optimizer can’t do prediction because data is different than what’s in the index

- Solution

- Edit model to tell EF to use VARCHAR, using column annotation

- In code, attribute

- Do not let EF create models, code-first

ORM ?

- He recommends not using one

ADO.NET

- 3 things to remember about stuff on internet about SQL Server

- Who wrote it

- When was it written

- Does it apply to my system

- ADO.NET Parameterized Queries

- AddWithValue–get rid of this

Overly Generic Queries

- Allowing searching on multiple fields

- So we construct one query, where clauses for each search field

- == null or matches

- IS NULL OR stuff gets into query

- Bad because it builds query plan just once for all the possible search combinations

- Always runs same query plan, no matter what you search on

- Can’t do a seek on index

- Options

- Stored procedure (see Gail’s “catch all queries”)

- Conditionals in EF side, exclude clauses

- Make SQL Server recompile plans each time–from within EF. Sometimes good

- Could write an interceptor that adds OPTION (RECOMPILE)
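The "exclude clauses" option can be sketched like this. It is my own example in Python with SQLite, not the speaker's code; the `people` table and the `build_search` helper are hypothetical. Only the filters that were actually supplied end up in the WHERE clause, so no `IS NULL OR` catch-all predicate is sent to the database.

```python
import sqlite3

def build_search(first_name=None, last_name=None, zip_code=None):
    """Build a query containing only the filters that were supplied.

    Column names come from this fixed list, never from user input;
    the values are always passed as parameters.
    """
    candidates = (
        ("first_name", first_name),
        ("last_name", last_name),
        ("zip_code", zip_code),
    )
    clauses, params = [], []
    for column, value in candidates:
        if value is not None:
            clauses.append(f"{column} = ?")
            params.append(value)
    sql = "SELECT id, first_name, last_name, zip_code FROM people"
    if clauses:
        sql += " WHERE " + " AND ".join(clauses)
    return sql, params

# Assumption: SQLite stands in for SQL Server; the people table is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE people (id INTEGER PRIMARY KEY, first_name TEXT, "
    "last_name TEXT, zip_code TEXT)"
)
conn.executemany(
    "INSERT INTO people (first_name, last_name, zip_code) VALUES (?, ?, ?)",
    [("Ann", "Lee", "53703"), ("Bob", "Lee", "53965"), ("Ann", "Ray", "53965")],
)

sql, params = build_search(last_name="Lee", zip_code="53965")
matches = conn.execute(sql, params).fetchall()
print(sql)
print(matches)
```

Each distinct combination of filters produces its own statement text, so each can get a plan suited to it, at the cost of a few more cached plans.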

Bloating the plan cache

- Reuse of execution plans often a good thing, avoid regenerating plan

- In order for a plan to be reused, the statement text must be textually identical, which, as we just saw, is the case for parameterized queries

- Ad-hoc workloads

Skip / Take

- OFFSET FETCH

- Next time we run query, we get different query plan

- Enable SQL Server setting ‘optimize for ad hoc workloads’

- Less aggressive at caching plans, generally a good thing

- EF6, pass in lambdas

- In SQL, values are parameterized, so the cached plan is reused rather than recreated
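A small sketch of why inlined paging values matter for plan reuse. This is my own illustration: SQLite stands in for SQL Server (so it uses LIMIT/OFFSET rather than OFFSET FETCH), and the `pupils` table and helper functions are hypothetical. It counts how many distinct statement texts each approach sends; on SQL Server, each distinct text would be a candidate for its own cached plan.

```python
import sqlite3

# Assumption: SQLite stands in for SQL Server; the pupils table is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pupils (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO pupils (name) VALUES (?)",
                 [(f"pupil{i}",) for i in range(100)])

PAGE_SIZE = 10
PAGE_SQL = "SELECT id, name FROM pupils ORDER BY id LIMIT ? OFFSET ?"

def page_inlined(page):
    """Paging values baked into the text: a new statement text per page."""
    sql = (f"SELECT id, name FROM pupils ORDER BY id "
           f"LIMIT {PAGE_SIZE} OFFSET {page * PAGE_SIZE}")
    return sql, conn.execute(sql).fetchall()

def page_parameterized(page):
    """Paging values sent as parameters: the statement text never changes."""
    rows = conn.execute(PAGE_SQL, (PAGE_SIZE, page * PAGE_SIZE)).fetchall()
    return PAGE_SQL, rows

inlined_texts, parameterized_texts = set(), set()
same_results = True
for page in range(10):
    sql_a, rows_a = page_inlined(page)
    sql_b, rows_b = page_parameterized(page)
    same_results = same_results and rows_a == rows_b
    inlined_texts.add(sql_a)
    parameterized_texts.add(sql_b)

print(len(inlined_texts), "distinct statement texts with inlined values")
print(len(parameterized_texts), "distinct statement text with parameters")
```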

Inserting data

- EF will run separate INSERT statements for every row being added

- Not good if you have to insert a lot of data

- Can use EF.BulkInsert or use EF 7 (has this out-of-the-box)
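To illustrate the row-by-row cost, here is a sketch of my own in Python with SQLite standing in for SQL Server. The `pupils` table and the `insert_batched` helper are hypothetical, and dedicated bulk-insert tooling for SQL Server works differently from this. It only shows how batching rows into multi-row INSERT statements cuts the number of statements sent.

```python
import sqlite3

# Assumption: SQLite stands in for SQL Server; the pupils table is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pupils (id INTEGER PRIMARY KEY, name TEXT, grade INTEGER)")
rows = [(f"pupil{i}", i % 12 + 1) for i in range(1000)]

# Row by row: one INSERT statement per row.
per_row_statements = 0
for row in rows:
    conn.execute("INSERT INTO pupils (name, grade) VALUES (?, ?)", row)
    per_row_statements += 1

def insert_batched(connection, data, batch_size=100):
    """Insert rows using one multi-row INSERT statement per batch."""
    statements = 0
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        placeholders = ", ".join(["(?, ?)"] * len(batch))
        flat_values = [value for row in batch for value in row]
        connection.execute(
            f"INSERT INTO pupils (name, grade) VALUES {placeholders}",
            flat_values,
        )
        statements += 1
    return statements

batched_statements = insert_batched(conn, rows)
conn.commit()

total = conn.execute("SELECT COUNT(*) FROM pupils").fetchone()[0]
print(per_row_statements, "statements row by row")
print(batched_statements, "statements when batched")
```

The batch size of 100 is an arbitrary choice for the sketch; real limits on parameters per statement vary by database.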

Code First, Performance Last

- Never allow your code to create your storage constructs

- (He means–code-first used to refresh database object)

Other Potential Issues

- Improper use of IN clauses

- >16 clauses in IN clause is bad

- Correlated subqueries instead of JOINs

- Parameterized queries with parameters defined to be length of data they contain

- Chatty apps

- Security concerns

- Increased IO and app bloat due to asking for too much data