- Don’t fear the code. It’s just code.

- Never write (or copy) a line of code that you don’t fully understand.

- Figure out how things really work and why they work the way they do.

- If you don’t understand something, go deeper. Read the source code for the library you’re using.

- Use tools to figure out how things work–debugger, unit tests, sample programs.

- Create a punchlist to guide your work–investigations, things to do, things to test.

- Test the hell out of your code. Be as thorough as possible.

- When you get stuck, go for a 5 minute walk.

- When you get stuck, describe your stuckness out loud to somebody else.

- Write down what you’ve learned. Create a FAQ document and answer all the questions.

- Try to teach someone else what you’ve learned. Create a blog.

- Leave breadcrumbs for yourself and others–personal notes or comments in the code.

Miscellaneous

A Recipe for Green-Field Software Development

[Re-post from Nov, 2008]

Developing Your Product and Your Customers in Parallel

I’ve been a member of a software development team since 1985. That’s 23 years as a software developer.

Like many developers who have been around for a few years, some of my grey hair can be attributed to having worked on hellish projects, or on projects that failed outright. Over the years, I’ve gradually added to my mental list of “worst practices”—things that tend to lead to project failure, or at least hide a failing project until it’s too late to turn it into a successful one.

It’s much easier to compile a list of worst practices than it is to pick some “perfect” development process. But a list of worst practices can still point the way to best practices: simply avoid the bad ones. At a minimum, we should avoid making the same mistakes over and over again.

If I had to pick a single worst practice (there are many), it would be this:

Not building the product that the customer really needed

This happens all of the time. We (the developers) build a product that is bug-free, efficient, scalable, and does exactly what we intended it to do. We even occasionally get the work done in something close to the amount of time that we said it would take.

But our well-built software still fails—for a variety of reasons.

- It’s too hard to use

- Users are unable to use it efficiently/effectively

- It’s missing one or more critical features

- Users don’t have a need for the software in the first place

- It’s too expensive, given what it does

In order to be “successful”, software has to meet a critical need that the user has. Good software solves a pressing problem. Great software does so in a way that seems natural to the users.

So what do I mean by software “failure”? Simply put, “failed” software is: software that doesn’t get used.

What are the consequences of failure? For internal software projects, it means wasted time and energy that could have been spent on things that the organization does need. For consulting houses, it means possibly not getting hired back by the client, or seeing your reputation diminished. For ISVs developing software/services to sell, it means lost revenue or even bankruptcy.

For developers, failure means knowing that you’ve wasted your time, intellect and energy on something that no one is going to use. That’s no fun.

Our goal then as developers is to build great software. We want to see users working with our stuff, to see it making their lives better, and to see them excited about it. That’s the true Holy Grail that many of us work towards.

The Remedy

So how do we develop great software? We can’t all be Steve Jobs, or hire him into our organization. So without brilliant insight, how do we figure out what the users truly need?

To understand what users need—truly, madly, deeply—we need to think beyond developing products and start thinking about developing an understanding of our users.

This focus on a Customer Development Process, rather than a Product Development Process, is the point of the book The Four Steps to the Epiphany, by Steven Gary Blank.

Blank’s main thesis is that we should work towards an understanding of our customers and their needs much earlier in the development lifecycle. We need to fully understand what customers need and whether we can sell them our product before we go too far down the path of building that product.

Blank proposes a very detailed Customer Development Process and talks a little bit about when it should occur, relative to the typical phases of a Product Development Process. This is a little tricky. If we wait too long to learn about our customers, we risk building the wrong product. But if we talk to them too early, before we’ve had time to think a little bit about our vision, we’re not really innovating, but just trying to build what they tell us to build. That also can lead to failure.

The Product Development Process

Having worked as a software developer for so many years, I’ve seen lots of different software lifecycles. In my first job, with Control Data Corp in Bloomington, MN, we were a Department of Defense shop and followed DOD-STD-2167A, a rigidly defined classic waterfall process. (Ask me about the 4-foot-high Software Requirements Specification.)

I’ve also done my share of agile development, working in groups that used various agile methods.

My main takeaway on software process is that it’s important to develop iteratively. For me, that’s the most important piece of the agile movement. Many of the other techniques, like pair programming and test-driven development, might be important, but don’t seem quite as critical as being able to build your product iteratively. Short iterations allow agility.

For me, one book that really made a lot of sense in laying out a framework for an iterative lifecycle was The Rational Unified Process Made Easy, by Per Kroll.

For a lot of people, when they think about RUP (Rational Unified Process), they think: heavyweight and lots of modeling/diagramming. But Kroll explains that RUP doesn’t necessarily mean heavy. He refers to the number of artifacts that you’re required to produce as your level of ceremony. And he describes RUP as:

An iterative approach with an adaptable level of ceremony

Again, iterative is the important part. An iterative approach with very little ceremony would basically be an agile methodology. But sometimes you work on projects that require a bit more ceremony—some project tracking, etc. On these projects, you can still be iterative, but with more ceremony. Here’s a picture:

RUP divides the lifecycle into four general phases: Inception, Elaboration, Construction and Transition. But Kroll is quick to point out that these phases do not simply map to the classic waterfall model (Requirements, Analysis/Design, Implementation, Testing). Rather, you’d typically perform some of each of the classic waterfall activities during each iteration of RUP. You’d likely be doing a lot more requirements-gathering in your Inception phases, but you’d also be doing some implementation. And you’d spend a lot of time writing code during Construction phases, but you might also still be tweaking some requirements.

Here’s a nice picture of how RUP typically plays out. Again, note that the idea of iterations is important:

Personally, I like this view of the development lifecycle a lot. This model is how I think about software development. Work in an iterative fashion, but be aware of what main phase you’re in and adjust your activities accordingly.

Over the years, I’ve taken lots of notes to answer the question, “What activities should I be doing at each step?” Kroll has a lot of good information in his book, but I’ve borrowed liberally from other sources. What I ended up with was a fairly detailed “a la carte” list of activities that you might engage in across the lifecycle.

I say “a la carte” because not every project will engage in every step. But I think it’s a nice master list to draw from, and I think I have the activities listed in a pretty reasonable order.

So here’s my list of high-level activities for the Product Development Lifecycle (see below for more detail on each activity):

- Vision

- Initial Requirements Gathering (Inception)

- GUI Conceptualizing

- Identify Candidate Architecture

- Define Non-Functional Tasks

- Define Iterations (Project Planning)

- Write Development Plan

- Execute Elaboration Iterations

- Write Business Plan

- Write Test Plan

- Write Deployment Plan

- Execute Construction Iterations

- Initiate Test Plan

- Deploy Alpha Release

- Write Customer Support Plan

- Execute Deployment Iteration

- Deploy Beta Release

- Launch Product

- Begin Planning Next Release

The Customer Development Process

I love the idea of the Customer Development Process, as Blank presents it in The Four Steps to the Epiphany. He talks about discovering who your customers are, figuring out what they need, and then testing your hypotheses. In other words, iterate not just on the product, but also on your model of who the customers are. This covers not just an understanding of the customers and their needs, but also the actual selling proposition and marketing of your product.

Here are the top-level activities in Blank’s customer development process:

- Customer Discovery

- Customer Validation

- Customer Creation

- Company Building

Lining Things Up

The trick is to figure out how the Product Development Process and the Customer Development Process relate to each other. If you listed out the various phases of each process side by side, how would they line up? At what point in your product development process should you start the customer development process? Or should it be the other way around?

Here’s a first stab at lining the two processes up. My goal was to come up with a high-level roadmap for doing new (green field) development, which would cover developing both the product and the customers.

| Product Development Process | Customer Development Process |
|---|---|
| Vision | |
| Initial Requirements Gathering | |
| GUI Conceptualizing | |
| Identify Candidate Architecture | |
| Define Non-Functional Tasks | |
| Define Iterations (Project Planning) | |
| Write Development Plan | |
| Execute Elaboration Iterations >> | Customer Discovery |
| Write Business Plan | |
| Write Test Plan | |
| Write Deployment Plan | |
| Execute Construction Iterations >> | Customer Validation |
| Initiate Test Plan | |
| Deploy Alpha Release >> | Customer Creation |
| Write Customer Support Plan | |
| Execute Deployment Iteration | |
| Deploy Beta Release | |
| Launch Product | |
| Begin Planning Next Release >> | Company Building |

Should I Keep Reading?

The rest of this post expands on each of the activities in the table above, listing details of what happens during each phase. This is the outline that I use when I’m trying to figure out “what to do next”.

Note again—this process is very much geared towards green-field development, and in a market where you are developing a product or service to sell to end-users.

Product Development Process—Details

Here’s the detailed breakdown of the steps involved in the Product Development Process. Much of this content comes from the books I list at the end of the article, primarily The Rational Unified Process Made Easy and Head First Software Development. But a lot of it is just my own concept of what you typically do during each phase.

Note that iterations occur in the Elaboration and Construction phases.

Vision

- Do a short description of a handful of possible products

- For each, describe:

- Market or market segment (which group of users, short description)

- What is the problem that they experience

- How painful to the users? What workarounds are in place?

- Short description of product that might help them solve this problem

- Short feature list (<10 items, single sentence)

- How does this product specifically solve the customer’s problem?

- Expand on top one or two possible products. For each, expand information to include:

- Write up SIMs (Specific Internet Market Segment, Walsh, pg 158)

- Lots of details about the targeted user group

- Other user information

- How often do you expect each user group to use the product?

- Why might they stop using the product?

- Rough guess as to size of this market, e.g. # potential users?

- Are there other non-primary users? (E.g. users who didn’t purchase, or just a different group of users)

- Also describe their “problem” and how product solves it

- Are they also potential buyers, or just users?

- What subset of the feature list might they use?

- What else differentiates them from primary user group?

- Also write up SIM for this user group

- Bluesky one or more revenue models. Answer for each:

- What would this customer pay for this product?

- Specify target price or range that might be tolerable

- One-time purchase or ongoing subscription? (or a combination)

- Pricing tiers? If so, describe

- Trial period? If so, describe

- If >1 user group, repeat for other groups

- What level of certainty do you have that they will purchase?

- Why might they not purchase? List reasons.

- Possible ways to mitigate non-purchase reasons.

- Does revenue model depend on continued use of the product?

- How will you collect money?

- What is your distribution channel? (i.e. how do they receive product)

- If ongoing subscription, describe what happens when they stop paying (e.g. limited access)

- Revenue model/estimates (per yr)

- Make rough estimate of ongoing costs, per user or account

- List expected revenue per user, single or ongoing, per yr

- Starting with desired annual revenue, describe # customers required to meet goal

- Marketing / Sales vision

- How might product be branded?

- How is product positioned (e.g. tagline)

- How might you reach desired users? (make aware)

- What is selling proposition (argument to buy)?

- Competitive analysis

- Assess and summarize competitive products that do similar/same thing as yours

- What are their strengths/weaknesses?

- Where can you improve on them? To what degree?

- What are you missing that competitors might have?
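The last revenue question in the Vision step (how many customers do you need to hit an annual revenue goal?) is simple arithmetic. Here’s a minimal Python sketch, assuming revenue and ongoing cost are both expressed per customer per year. The function names and the sample figures are hypothetical, just for illustration.

```python
import math


def customers_for_revenue(annual_revenue_goal: float, revenue_per_customer: float) -> int:
    """Customers needed to reach a gross (top-line) annual revenue goal."""
    if revenue_per_customer <= 0:
        raise ValueError("revenue per customer must be positive")
    # Round up: a fraction of a customer doesn't pay anything.
    return math.ceil(annual_revenue_goal / revenue_per_customer)


def customers_for_net_income(annual_net_goal: float,
                             revenue_per_customer: float,
                             cost_per_customer: float) -> int:
    """Customers needed once the ongoing cost of serving each one is subtracted."""
    margin = revenue_per_customer - cost_per_customer
    if margin <= 0:
        raise ValueError("each customer costs at least as much as they pay")
    return math.ceil(annual_net_goal / margin)


# Hypothetical figures: $100,000/yr goal, $60/yr subscription, $12/yr to serve each customer.
print(customers_for_revenue(100_000, 60))         # 1667
print(customers_for_net_income(100_000, 60, 12))  # 2084
```

The gap between the two answers is a quick reminder of why the ongoing-cost estimate is worth making.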

Initial Requirements Gathering

(Chap 2 of Head First Software Development)

- Generate quick list of basic ideas, 1-3 sentences each, Title/Description

- Ask user questions to flesh out the list

- Bluesky to generate feature lists (Title/Description)

- Use other people

- Okay to spit out non-functional stuff, like specific GUI thoughts, or architecture

- Build User Stories

- Describe one thing

- Language of the customer

- Title/Description

- 1-3 sentences

- Pull out non-customer stories, save for later (design)

- Refine initial set of user stories, w/customer feedback

- Provide estimates for each user story

- Include assumptions

- Team estimation and revision

- Clarify assumptions w/users, if necessary

- Break apart user stories that are >15 days

- Add up estimates to get estimate for total project
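The last two steps (splitting oversized stories and adding up the rest) are easy to automate once the estimates live in a script or spreadsheet. Here’s a minimal Python sketch, assuming each story carries a single estimate in person-days. The `UserStory` type and the sample stories are hypothetical.

```python
from dataclasses import dataclass

MAX_STORY_DAYS = 15  # stories estimated above this should be broken apart


@dataclass
class UserStory:
    title: str
    estimate_days: float  # team estimate, in person-days


def stories_to_split(stories: list[UserStory]) -> list[UserStory]:
    """Stories too big to estimate reliably; break these into smaller stories."""
    return [s for s in stories if s.estimate_days > MAX_STORY_DAYS]


def total_estimate(stories: list[UserStory]) -> float:
    """Estimate for the whole project: the sum of the story estimates."""
    return sum(s.estimate_days for s in stories)


# Hypothetical stories, for illustration only.
stories = [
    UserStory("Log in", 3),
    UserStory("Search catalog", 8),
    UserStory("Check out and pay", 20),
]
print([s.title for s in stories_to_split(stories)])  # ['Check out and pay']
print(total_estimate(stories))                       # 31
```

Once a big story is split, its pieces replace it in the list and the total is recomputed from the new estimates.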

GUI Conceptualizing

- Select key use cases that need GUI concept

- User interaction goals

- Outline some key goals of user interaction model (e.g. discoverability, efficiency, slick animation, etc.)

- Describe user action requirements verbally for specific use cases

- Assess similar products’ GUIs for similar use cases

- Pros/cons of each

- For each use case selected

- Paper prototype one or more possible GUIs

- Storyboard any dynamic behavior

- Brainstorm alternative approaches and paper prototype

- Identify common sequences of use cases (e.g. find/edit)

- Storyboard use case sequences

- Brainstorm ways to optimize the storyboard/sequence

- Assess GUI model against several measures of good GUI design

- Technical review of GUI feasibility

- Build it, buy it, or freebie (part of tool or freeware)?

- If build, rough guess as to effort

- If buy, what is the cost? One-time & ongoing.

- Assess technical risk

- Are there any aspects of GUI that need to be proven out?

- Hammer looking for nails

- Make short list of most compelling GUI models in new products

- Is there anywhere in product that you might use these models?

- Brainstorm user assistance model (help, wizards, demos, etc.–learning)

- GUI models from competitors

- If there are competitive products that compete in same space, summarize their main GUI elements.

- Assess—is their GUI or user interaction model an asset or a liability?

- Review

- Early review internally (not w/customers)

- Review static screen mockups (e.g. paper prototypes)

- Look at discoverability–obvious what it’s used for?

- Aesthetics

Identify Candidate Architecture

- Identify one possible architecture that could support the product

- Identify any areas of technical risk

- Discuss pros/cons/risks

- [For more details, see Kroll]

Define Non-Functional Tasks

- Define additional tasks to be completed during inception that are NOT use cases

- Possible examples include:

- GUI proof-of-concept, for technical & usability

- Building candidate architecture (must do)

- Technical proof-of-concept

- Capacity testing for architecture (e.g. scalability)

- Provide priorities and estimates for all tasks (will feed into iteration planning, along w/use cases)

Define Iterations

(Chap 3 of Head First Software Development)

- Set target date for first (next) release

- Work w/customer to prioritize user stories

- Also feed non-functional tasks into process

- Select subset of user stories to meet target date (Milestone 1)

- If the features don’t fit target date, re-prioritize

- Focus on user stories that are absolutely critical

- Baseline functionality–smallest set of features so that SW is at all useful to customers

- Prioritize user stories in Milestone 1.0 (1-5, with 1 as highest priority)

- Assign user stories to iterations, using 20-day iterations

- Based on priority

- Or user stories required to implement other user stories

- Use velocity of 0.7 (0.7 days of effort for each real day), i.e. each 20-day iteration should contain 14 person-days of estimated work per developer

- Reassess schedule and adjust schedule and/or content

- Because of velocity, things likely can’t fit

- Add iteration(s), change M1 date, or both

- Get iterations & user stories into tracking spreadsheet

- Construct burn-down graph for 1st iteration

- X axis is calendar days

- Y axis is person-days of work remaining

- Draw diagonal line as ideal burn-down rate
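The iteration arithmetic above (20-day iterations, a velocity of 0.7, stories pulled in priority order, and an ideal diagonal on the burn-down graph) can be sketched in a few lines of Python. This is a minimal sketch under my own assumptions: a simple greedy fill that ignores dependencies between stories, and hypothetical names throughout.

```python
from typing import NamedTuple

ITERATION_DAYS = 20  # working days per iteration
VELOCITY = 0.7       # days of estimated work completed per real working day


class Story(NamedTuple):
    title: str
    priority: int         # 1 = highest
    estimate_days: float  # person-days


def iteration_capacity(developers: int,
                       iteration_days: int = ITERATION_DAYS,
                       velocity: float = VELOCITY) -> float:
    """Person-days of estimated work that fit in one iteration."""
    return developers * iteration_days * velocity


def assign_to_iterations(stories: list[Story], capacity: float) -> list[list[str]]:
    """Greedily fill iterations in priority order.

    Dependencies between stories are ignored here. A story bigger than a whole
    iteration still ends up alone in its own iteration, a signal to split it.
    """
    iterations: list[list[str]] = [[]]
    remaining = capacity
    for story in sorted(stories, key=lambda s: s.priority):
        if story.estimate_days > remaining and iterations[-1]:
            iterations.append([])  # current iteration is full; start the next one
            remaining = capacity
        iterations[-1].append(story.title)
        remaining -= story.estimate_days
    return iterations


def ideal_burndown(total_work: float,
                   iteration_days: int = ITERATION_DAYS) -> list[tuple[int, float]]:
    """(calendar day, person-days remaining) along the ideal diagonal."""
    return [(day, total_work * (1 - day / iteration_days))
            for day in range(iteration_days + 1)]


# Hypothetical one-developer backlog, for illustration only.
capacity = iteration_capacity(developers=1)  # 20 days * 0.7 = 14 person-days
backlog = [Story("Search", 2, 8), Story("Log in", 1, 5),
           Story("Reports", 3, 6), Story("Export", 4, 3)]
print(assign_to_iterations(backlog, capacity))  # [['Log in', 'Search'], ['Reports', 'Export']]
print(ideal_burndown(capacity)[10])             # (10, 7.0)
```

If the stories needed for Milestone 1 spill into more iterations than the target date allows, that’s the cue to add iterations, move the date, or re-prioritize.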

Write Development Plan

- Describe everything that will happen from here on out

- Include

- Iterations and contained user stories & tasks

- Milestones

- Review points and staff

- High-level test plan

- alpha/beta/release plan

- Staff

- Schedule

- Tools

- Show steps in Product Development process

- Show steps in Customer Development process

- Show how the two processes line up

- Include calendar alignment, if appropriate

Execute Elaboration Iterations

(Chap 4 of Head First Software Development – User stories & tasks)

- Break down each user story into tasks

- Title/Description/Estimate

- Provide estimate for each task

- E.g. Create class, create GUI prototype, create schema, create SQL scripts

- Each task should be 0.5 – 5 days

- Plot where you are on burn-down graph

- Calendar day vs. new estimate of person-days of work left

- Update spreadsheet w/tasks & their estimates

- Task estimates replace user story estimates

- Also track burn-down in spreadsheet

- Put stickies on big board, tasks, In Progress/Complete, etc. (pg 116 of Pilone/Miles)

- Start working on first tasks

- Move stickies on board when they are In Progress

- Daily stand-up meetings to track progress

- First thing in the morning

- Track progress–what has each person accomplished

- Update burn-down rate

- Update tasks

- What happened yesterday, what is plan for today

- Talk about any problems

- 5-15 mins long

- Add unplanned tasks to iteration, if necessary

- Add user story, estimate, break into tasks, review w/customer, add to board

- Use red stickies

- Update burn-down, showing that you’re off

- Next iteration (Chap 10 of Head First Software Development)

- End of iteration review (pg 342-343 of Pilone/Miles)

- Verify SW passes all tests

- Demo/review w/customer

- Plan next iteration

- Add new user stories, if required

- Update priority/estimates for everything

- Adjust velocity

- Feed in bug backlog as tasks

- Priority tradeoffs include bug-fixes vs. new features

- Follow steps from Pilone/Miles chap 4 (break down into tasks and estimate)
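During the iteration, each day’s point on the burn-down graph is just the team’s new estimate of work left, compared against the ideal diagonal. At the end of the iteration, one simple way to “adjust velocity” is to recompute it from what actually got finished. Here’s a minimal Python sketch; the function names and numbers are hypothetical, and measuring velocity this way is my own assumption rather than a formula taken from the sources.

```python
def ideal_remaining(day: int, total_work: float, iteration_days: int = 20) -> float:
    """Person-days that should be left on `day` if work burned down evenly."""
    return total_work * (1 - day / iteration_days)


def behind_schedule(day: int, remaining_estimate: float, total_work: float,
                    iteration_days: int = 20) -> bool:
    """True when today's estimate of remaining work sits above the ideal line."""
    return remaining_estimate > ideal_remaining(day, total_work, iteration_days)


def measured_velocity(completed_person_days: float, developers: int,
                      working_days: int) -> float:
    """Estimated work actually finished per developer per working day."""
    return completed_person_days / (developers * working_days)


# Day 10 of a 20-day iteration that started with 14 person-days of work:
print(behind_schedule(10, remaining_estimate=9, total_work=14))  # True (ideal is 7)

# Two developers finished 24 person-days of estimated work in 20 days:
v = measured_velocity(24, developers=2, working_days=20)
print(v)  # 0.6, so plan the next iteration with 0.6 instead of 0.7
```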

Write Business Plan

- Goal isn’t to get funding, but to make a case for the product/business (at least on paper)

- Include stuff like

- Market description

- Customer description

- Description of competitors’ products/services

- Product proposition

- What is customers’ problem?

- What is your vision for product/service

- How does product/service solve their problem?

- Outline of development plan

- Outline of Product/Customer Development processes

- Summarize revenue model

- Summarize marketing/sales plan

- Business structure: staffing, organization, etc.

- Add other typical business plan elements

Write Test Plan

- What to test, how to test, when to test

- Plan for test-driven development, using appropriate TDD tools

- Plan automated/nightly builds and automation of testing

- See Pilone/Miles, chap 7, among others

Write Deployment Plan

- How/when will software be deployed?

- Map out alpha/beta/launch

- Where does testing fit into the plan?

- How will customers get the software?

- How will they pay for it?

- How do you track customers?

Execute Construction Iterations

(Chap 4 of Head First Software Development – User stories & tasks)

- Break down each user story into tasks

- Title/Description/Estimate

- Provide estimate for each task

- E.g. Create class, create GUI prototype, create schema, create SQL scripts

- Each task should be 0.5 – 5 days

- Plot where you are on burn-down graph

- Calendar day vs. new estimate of person-days of work left

- Update spreadsheet w/tasks & their estimates

- Task estimates replace user story estimates

- Also track burn-down in spreadsheet

- Put stickies on big board, tasks, In Progress/Complete, etc. (pg 116 of Pilone/Miles)

- Start working on first tasks

- Move stickies on board when they are In Progress

- Daily stand-up meetings to track progress

- First thing in the morning

- Track progress–what has each person accomplished

- Update burn-down rate

- Update tasks

- What happened yesterday, what is plan for today

- Talk about any problems

- 5-15 mins long

- Add unplanned tasks to iteration, if necessary

- Add user story, estimate, break into tasks, review w/customer, add to board

- Use red stickies

- Update burn-down, showing that you’re off

- Next iteration (Chap 10 of Head First Software Development)

- End of iteration review (pg 342-343 of Pilone/Miles)

- Verify SW passes all tests

- Demo/review w/customer

- Plan next iteration

- Add new user stories, if required

- Update priority/estimates for everything

- Adjust velocity

- Feed in bug backlog as tasks

- Priority tradeoffs include bug-fixes vs. new features

- Follow steps from Pilone/Miles, chap 4 (break down into tasks and estimate)

Initiate Test Plan

- Begin regular testing

- Functional, integration, system test (no customer testing yet)

Deploy Alpha Release

- Outline goals for alpha and exit criteria

- Identify potential customers/users

- Communicate w/users about goals & feedback mechanism

- Feeds into appropriate spot in Customer Development process

- Possibly at end of each construction iteration

- Gather feedback and feed into development

- E.g. Changes desired/required?

Write Customer Support Plan

- How will customers be supported after software is deployed?

- List main goal(s)

- Decide on tools, e.g.

- External issue tracking

- FAQ sheets for tech support staff

- Decide on process(es), e.g.

- Communication w/customer

- Looking for answer in FAQ

- How to communicate answer, if known

- Get closure

- 2nd tier–investigate, look for workaround

- Interaction between staff (e.g. support/development)

- 3rd tier–reported bug, e.g. give bug # and have traceback mechanism

- Escalation process

Execute Deployment Iteration

- Working on deployment tasks, e.g.

- Installs/uninstalls

- Distribution/delivery

- Payment

- Customer tracking

Deploy Beta Release

- Outline goals for beta and exit criteria

- Identify potential customers/users

- Communicate w/users about goals & feedback mechanism

- Feeds into appropriate spot in Customer Development process

- Likely after Deployment Iteration

- Gather feedback and feed into development

- E.g. Changes desired/required?

- Beta should not last indefinitely

Launch Product

- Announce product

- Launch it

- Throw a big party

Begin Planning Next Release

- Post-mortem for Product/Customer Development processes

- Lessons learned

- Changes to process

- Assess and organize outstanding bugs

- Prioritize, estimate

- Organize list of possible future features

- Plan mechanism for using existing customers to get feedback on priorities

- Prioritize, estimate

- Decide on schedule for next release

- Map out iterations

- Each iteration either bug-fixing or new development

- Bug-fixing phase likely first

- Goal: All bugs fixed prior to next release

- Continued refinement of plan, based on ongoing customer feedback

- Back to start of Product/Customer Development processes for next release (follow same processes, including updating relevant plans)

Customer Development Process—Details

Here’s the detailed breakdown of the steps involved in the Customer Development Process. This comes directly from Four Steps to the Epiphany.

Customer Discovery

- State hypotheses

- Write briefs, state assumptions about product, customers, pricing, demand, competitors

- Test problem hypotheses

- Test in front of potential customers

- Test product concept

- Test product features in front of customers

- Solves their problem?

- Also test business model

- Must-have?

- Pricing

- Distribution

- Verify

- You understand the customer’s problems

- Your product solves these problems

- Customers will pay for the product

- You have in mind a profitable business model

- Repeat if necessary

Customer Validation

- Get Ready to Sell

- Articulate value proposition

- Prep sales materials & collateral plan

- Develop distribution channel plan

- Develop sales roadmap

- Hire sales closer

- Synch up Product/Customer Dev teams on features/dates

- Formalize advisory board

- Sell to Visionary Customers

- Sell unfinished product

- Answer all sales roadmap questions

- Develop Positioning

- Initial positioning

- Articulate belief about product and its place in the market

- Verify

- Enough orders to prove we can sell?

- Profitable sales model?

- Profitable business model?

- Can you scale the business?

- Repeat, if necessary

Customer Creation

- Get Ready to Launch

- Market Type Questionnaire

- Choose Market Type

- Choose 1st Year Objectives

- Position company & product

- Select PR agency

- Positioning audits

- Positioning to market type

- Launch company & product

- Select launch type

- Select customer audience

- Select the messengers

- Craft the messages

- Message context

- Create demand

- Demand creation strategy

- Demand creation measurements

- Iterate or exit

Company Building

- Reach mainstream customers

- Change “earlyvangelists” into mainstream customers

- Manage sales growth by market type

- Review management / Create mission culture

- Review management

- Develop “mission-centric” culture

- Transition to functional departments

- Set department mission statement

- Set department roles by market type

- Build fast-response departments

- Implement mission-centric management

- Create an “information culture”

- Build a “leadership culture”

Closing Thoughts

The key takeaway for me from Blank’s book was as follows:

It’s not enough to build a great product. You must also build a product that the customers need and that they will buy. It’s also critical to find a workable business model to sell to the right customers at the right price.

If you haven’t yet read Four Steps to the Epiphany, I’d really encourage you to go out and read it. Then you can start thinking about how to develop your customers, as well as your product.

Sources

I’ve used the following sources, in varying degrees, for this post:

- IBM Rational Unified Process, Wikipedia.

- Four Steps to the Epiphany, Blank, cafepress.com, 2006.

- The Business of Software, Cusumano, Free Press, 2004.

- The Rational Unified Process Made Easy: A Practitioner’s Guide to the RUP, Kroll, Addison-Wesley, 2003.

- Rapid Development, McConnell, Microsoft Press, 1996.

- Head First Software Development, Pilone & Miles, O’Reilly, 2008.

- Eric Sink on the Business of Software, Sink, Apress, 2006.

- Micro-ISV – From Vision to Reality, Walsh, Apress, 2006.

2000things.com

Just a quick shout-out to new followers of this blog. Also take a look at my other two (more active) blogs:

2,000 Things You Should Know About C#

2,000 Things You Should Know About WPF

Why I Turned Off WordAds

Below is a letter that I recently sent to WordPress support, on the occasion of my turning off WordAds on two of my blogs. The attachments that I mention, examples of ads on my blogs, appear after the content of the letter.

To: support@wordpress.com

23 Jan 2013

I host two blogs on WordPress for which I signed up in the WordAds program.

I’m going to disable WordAds in both cases, based on the type and style of ads that are being shown. But I thought it fair to share some thoughts with you, related to why I’m turning off WordAds.

The blogs are: csharp.2000things.com and wpf.2000things.com

Both of these blogs are quite small, in terms of traffic. The first blog gets around 10,000 hits/month, with just under 12,000 impressions in the most recent month. The second blog gets around 20,000 hits/month, with just under 20,000 impressions in Nov 2012.

Given how tiny these blogs are, in terms of traffic, I realize that WordPress won’t care all that much whether or not I host ads. But I’d still like to share some quick thoughts on the ads being served, since this applies to any of your blogs serving WordAds.

My main complaints with the ads being served are:

- They don’t look like ads (e.g. ad consists solely of embedded YouTube video)

- Ads are not separated from my blog content in any way, e.g. with a border

- Ads aren’t relevant to my readers

- Multiple ads lead to a cluttered web page

- The visual style of the ads cheapens my blog / brand

What I’d hope for are ads that follow the design guidelines of traditional print ads a little more closely, i.e. ads that are clearly offset by a border, clearly marked as an ad, and clearly contain a brand image for the item or service being advertised. Ads that contain other content, like images from YouTube videos and giant Download buttons, overpower the content of my blog and also confuse the reader by appearing to be part of my content, rather than an ad. (See attached images for examples from my blog.)

There are all sorts of ads served on the web. Some are very tasteful and elegant, some less so. I’m disappointed that the ads served up via WordAds are consistently in the latter category. An ad should not actively try to pull the reader’s attention from the content and it should definitely not try to “trick” the reader into doing so (e.g. large download buttons). Rather, professional ads should respect the reader by being clearly marked as an ad, displaying the relevant brand, and trusting that the reader will read the ad if they are interested in the product or service.

As a blog author, my main request in the area of ads is that they appear to be at least as professional and tasteful as my blog itself. Since the ads served by WordAds are failing to live up to that expectation, I’m going to turn them off. WordPress does host many blogs that I would consider to be well-designed and tasteful. Your themes are also beautifully designed, with a very professional look. But the ads that you’re currently serving don’t rise to that standard. They cheapen not only the individual blogs that serve the ads, but the WordPress brand itself.

I realize that serving web-based ads is a tricky business to be in. WordPress needs to make money, as do the vendors serving the ads. And given the state of web-based ads, it’s easy to serve up the same sort of ads that the other guys serve. But given the strength and respectability of the WordPress brand, I would hope that you’d set the bar a little bit higher. WordPress is already a leader when it comes to hosting blogs. With some changes to the ads that you serve, you could also be a leader in the area of served ads, setting an example for other content producers on the web.

Thanks for taking the time to read this and to consider my thoughts.

Sean Sexton

sean@seans.com

The Latest on HP TouchPad Availability

I just received the following e-mail update on HP TouchPad availability. I think I would have paid $99 for a TouchPad when it was first discounted, but after hearing so many stories of sluggishness and other problems, I’m unlikely to get one now–even at $99.

—E-Mail from HP—

Dear Customer,

Thank you for your interest in the HP TouchPad and webOS. The overwhelming demand for this product in recent days has made it difficult to fulfill your request at the present time, and we are working to make more available as soon as possible. While we do not yet have specific details, we know it will be at least a few weeks before we have a limited quantity available again. We will keep you informed as we have more specifics that we can communicate, and we encourage you to join the conversation here for the latest information.

In light of this and other recent HP news, we want you to know that we remain committed to you. We will continue to honor our warranties now and in the future. We will continue delivering products that make a difference in your life and we will continue to provide the best possible service to you every day.

We are grateful for your patience and loyalty and to show our gratitude, we are offering you an exclusive one-time opportunity to save an additional amount on all of our products, valid through the end of the day tomorrow, Wednesday, August 31, 2011. You’ll save 25% off all printers, ink, HP accessories, and PCs starting at or above $599. To take advantage of this offer, use your unique coupon code 4856696 at checkout, and for more information on the details of this offer, please look at the bottom of this email.

Sincerely,

hpdirect.com

—E-Mail from HP—

MDC 2010 Takeaways

I attended the Minnesota Developers Conference (MDC 2010) yesterday in Bloomington, MN. A nice dose of conference-motivation–some good speakers talking about great technologies. In the FWIW category, here are my lists of takeaways for the talks that I attended.

1. Keynote – Rocky Lhotka (Magenic)

General overview of development landscape today, especially focused on cloud computing and the use of Silverlight. Takeaways:

- We’re finally getting to a point where we can keep stuff in “the cloud”, access anywhere, from any device

- Desire to access application data in the cloud, from any device, applies not just to consumer-focused stuff, but also to business applications

- Smart client apps, as opposed to just web-based, are important/desired – intuitive GUI is how you differentiate your product and what users now expect

- HTML5 is on the way, will enable smart client for web apps

- Silverlight here today, enables smart clients on most devices (not iPhone/iOS)

- Silverlight/WPF is ideal solution. You write .NET code, reuse most GUI elements on both thick clients (WPF) running on Windows, and thin clients (Silverlight) running web-based or on mobile devices

- I didn’t realize how much I have in common with Rocky: working on teletypes, DEC VAX development, Amiga development. :O)

- http://www.lhotka.net/weblog/ , @RockyLhotka (Twitter)

2. WPF with MVVM From the Trenches – Brent Edwards (Magenic)

Practical tips for building WPF applications based on MVVM architecture. What is the most important stuff to know? Excellent talk. Takeaways:

- MVVM excellent pattern for separating UI from behavior. Benefits: easier testing, clean architecture, reducing dependencies

- MVVM is a perfect fit for WPF/Silverlight apps, where it’s very often used; it makes heavy use of data binding

- Details of how to do data binding in MVVM, for both data and even for command launching

- How to use: data binding, DataContext, Commanding, data templates, data triggers, value converters. (Most often used aspects of WPF)

- Showed use of message bus, centralized routing of messages in typical MVVM application. Reduces coupling between modules. (aka Event Aggregator). Used Prism version.

- Slide deck – http://www.slideshare.net/brentledwards/wpf-with-mvvm-from-the-trenches

- http://blog.edwardsdigital.com/, @brentledwards (Twitter)
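To make the data-binding and commanding bullets a little more concrete, here is a minimal view-model sketch. It is not code from the talk; `DownloadViewModel` and `RelayCommand` are hypothetical names, and the XAML that would bind to them is omitted. It relies only on `INotifyPropertyChanged` (so bound controls refresh when a property changes) and `ICommand` (so a button can bind to a command instead of a click handler).

```csharp
using System;
using System.ComponentModel;
using System.Windows.Input;

// Hypothetical minimal ICommand that forwards to delegates.
public class RelayCommand : ICommand
{
    private readonly Action _execute;
    private readonly Func<bool> _canExecute;

    public RelayCommand(Action execute, Func<bool> canExecute = null)
    {
        _execute = execute ?? throw new ArgumentNullException(nameof(execute));
        _canExecute = canExecute;
    }

    public event EventHandler CanExecuteChanged;

    public bool CanExecute(object parameter) => _canExecute == null || _canExecute();

    public void Execute(object parameter) => _execute();

    // Tells bound controls (e.g. a Button) to re-query CanExecute.
    public void RaiseCanExecuteChanged() => CanExecuteChanged?.Invoke(this, EventArgs.Empty);
}

// Hypothetical view model: a TextBlock would bind to Status, a Button to StartCommand.
public class DownloadViewModel : INotifyPropertyChanged
{
    private string _status = "Idle";

    public event PropertyChangedEventHandler PropertyChanged;

    public RelayCommand StartCommand { get; }

    public DownloadViewModel()
    {
        // Enabled only while idle; executing it just changes Status in this sketch.
        StartCommand = new RelayCommand(() => Status = "Downloading", () => Status == "Idle");
    }

    public string Status
    {
        get => _status;
        set
        {
            if (_status == value) return;
            _status = value;
            // Bound controls re-read the property when this fires.
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(nameof(Status)));
            // Status affects CanExecute, so let the command's bindings know.
            StartCommand?.RaiseCanExecuteChanged();
        }
    }
}
```

The view model never touches a control, which is what makes it testable without a UI: a unit test can execute the command and check `Status` directly.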

3. Developer’s Guide to Expression Blend – Jon von Gillern (Nitriq)

Demoing use of Expression Blend for authoring UI of WPF/Silverlight apps. Also demoed Nitriq/Atomiq tools. Takeaways:

- Blend not just for designers; developers should make it primary tool for editing GUI—more powerful than Visual Studio

- Lots of tricks/tips/shortcuts – he handed out nice cheatsheet – http://blog.nitriq.com/content/binary/DevelopersGuidetoBlend.pdf

- Very easy to add simple effects (e.g. UI animations) to app elements to improve look/feel, just drag/drop

- Nitriq – tool for doing basic code metrics, summaries, visualize code, queries that look for style stuff. (Free for single assembly, $40 for full version)

- Atomiq – find/eliminate duplicate code, $30

- http://blog.nitriq.com/, @vongillern (Twitter)

4. Introduction to iPhone Development – Damon Allison (Recursive Awesome)

Basic intro to creating iPhone app, showing the tools/language/etc. From a .NET developer’s perspective. Takeaways:

- You have to do the dev work on a Mac—no tools for doing the work on Windows

- The tools are archaic, hard to work with, much lower level than .NET. (E.g. no automatic memory management/garbage collection)

- In many cases, consider creating web-based mobile app, rather than native iPhone. But then you wrestle with CSS/browser issues

- Worth considering creation of native iPhone app for the best user experience

- Lots of crestfallen-looking .NET developers in the audience

- http://www.recursiveawesome.com/blog/ , damonallison (Twitter)

5. A Lap Around Prism 4.0 – Todd Van Nurden (Microsoft)

Showing Prism—a free architectural framework written by Microsoft, which came out of the Patterns and Practices group. Good for creating extensible apps, with plug-in model. Takeaways:

- Leverages MEF (Managed Extensibility Framework)

- Good for apps where you have the idea of a lot of “tools” that plug into main application architecture. Or for applications made up of various building blocks.

- You write application modules that are decoupled from main app framework, loaded on demand.

- Prism on Codeplex – http://compositewpf.codeplex.com/

- http://www.spoke.com/info/p5rAVze/ToddVanNurden

The Baby Has Two Eyeballs

Driving home the other night, I was teasing my 4-year-old daughter (as I often do). We were talking about what sorts of games to play when we got home and I suggested that we could spend our time poking Lucy’s baby brother Daniel in the eye. I also pointed out that we’d have to take turns and asked Lucy which of us should go first.

Lucy protested. “Daddy,” she said excitedly, “we don’t have to take turns–Daniel has two eyeballs!”

After I stopped laughing at this, I realized that Lucy had seen something very important, which hadn’t even occurred to me:

Poking a baby in the eye is something that can be done in parallel!

Yes, yes, of course–you should never poke a baby in the eye. And if you have a pre-schooler, you should never suggest eye-poking as a legitimate game to play, even jokingly. Of course I did explain to Lucy that we really can’t poke Daniel in the eye(s).

But Lucy’s observation pointed out to me how limited I’d been in my thinking. I’d just assumed that poking Daniel in the eye was something that we’d do serially. First I would poke Dan in the eye. And then, once finished, Lucy would step up and take her turn poking Dan in the eye. Lucy hadn’t made this assumption. She immediately realized that we could make use of both eyeballs at the same time.

There’s an obvious parallel here to how we think about writing software. We’ve been programming on single-CPU systems for so long that we automatically think of an algorithm as a series of steps to be performed one at a time. We all remember the first programs that we wrote and how we learned about how computers work. The computer takes our list of instructions and patiently executes them one at a time, from top to bottom.

But, of course, we no longer program in a single-CPU environment. Most of today’s desktop (and even laptop) machines come with dual-core CPUs. We’re also seeing more and more quad-core machines appear on desktops, even in the office. We’ll likely see an 8-core system from Intel in early 2010 and even 16-core machines before too long.

So what does this mean to the average software developer?

We need to stop thinking serially when writing new software. It just doesn’t cut it anymore to write an application that does all of its work in a single thread, from start to finish. Customers are going to be buying “faster” machines which are not technically faster, but have more processors. And they’ll want to know why your software doesn’t run any faster.

As developers, we need to start thinking in parallel. We have to learn how to decompose our algorithms into groups of related tasks, many of which can be done in parallel.
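As a small illustration of that kind of decomposition, here is a sketch that splits a sum of squares into chunks, computes each chunk on its own task, and then combines the partial results. It is not from the original post: it assumes .NET 4 or later for the Task Parallel Library (which arrived after this was written), and `ParallelSum.SumOfSquaresParallel` is a hypothetical name. Because each chunk touches only its own local total, no locking is needed; the only coordination is waiting for the partial sums.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class ParallelSum
{
    // Splits [0, n) into `chunks` roughly equal ranges, sums i*i over each
    // range on a separate task, then adds up the per-range partial sums.
    public static long SumOfSquaresParallel(int n, int chunks)
    {
        if (chunks < 1) throw new ArgumentOutOfRangeException(nameof(chunks));
        int chunkSize = (n + chunks - 1) / chunks;   // ceiling division

        Task<long>[] tasks = Enumerable.Range(0, chunks).Select(c =>
        {
            int start = c * chunkSize;
            int end = Math.Min(n, start + chunkSize);
            return Task.Run(() =>
            {
                long partial = 0;                    // local to this task
                for (int i = start; i < end; i++)
                    partial += (long)i * i;
                return partial;
            });
        }).ToArray();

        // Task.Result blocks until each chunk is finished.
        return tasks.Sum(t => t.Result);
    }
}
```

With four chunks on a four-core machine, the four ranges can run at the same time, one "eyeball" per core.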

This is a paradigm shift in how we design software. In the same way that we’ve been going through a multiprocessing hardware revolution, we need to embark on a similar revolution in the world of software design.

There are plenty of resources out there to help us dive into the world of parallel computing. A quick search for books reveals:

Introduction to Parallel Computing – Grama, Karypis, Kumar & Gupta, 2003.

The Art of Multiprocessor Programming – Herlihy & Shavit, 2008.

Principles of Parallel Programming – Lin & Snyder, 2008.

Patterns for Parallel Programming – Mattson, Sanders & Massengill, 2004.

The following paper is also a good overview of hardware and software issues:

The Landscape of Parallel Computing Research: A View from Berkeley – Asanovic et al., 18 Dec 2006.

My point here is this: As a software developer, it’s critical that you start thinking about parallel computing–not just as some specialized set of techniques that you might use someday, but as one of the primary tools in your toolbox.

After all, today’s two-eyed baby will be sporting 16 eyes before we know it. Are you ready to do some serious eye-poking?

Learning Out Loud

I’ve always sort of figured that this blog was a place to post things I was just learning, rather than a place to publish tutorials about technologies that I have more expertise in.

Because of the nature of our field, and the amount of new technologies that are always coming out, I’m far more interested in learning than I am in teaching. There are already plenty of great teachers out there who are blogging, writing or lecturing. I don’t really aspire to sell myself as a teacher of technologies—that would take far too much time and energy.

Instead, I see this blog as a forum for my attempts to learn new technologies—e.g. WPF and Silverlight. I’m always looking for new ways to motivate myself to learn new technologies and having a blog is a good way to force myself to dive in and start learning something new. When I realize that I haven’t posted anything for a few days, I feel the urge to start pulling together the next post. Then, because I know I’m going to have to write about it, I find that I force myself to explore whatever the topic is in a much deeper manner than I would if I were just reading a book or attending a class.

This has been working out great so far. I’m discouraged by how little time I have to study these new technologies. I’d like to post far more frequently than I do. But at least I’m gradually learning some new bits and pieces, about technologies like WPF and Silverlight.

Reminding myself of my goals also helps me to just relax and not worry so much about making mistakes. I’m just capturing on “paper” what I’m learning, as I learn it. Since I’m only beginning the journey of grokking whatever it is, I don’t need to worry about whether I get it right or not.

Remembering all of this led me to change the tagline of this blog. Instead of offering up my thoughts on various topics, I now see this as “learning out loud”. That perfectly describes what I think I’m doing—learning new stuff, stumbling through it, and capturing the current state of my knowledge so that I can come back and refer to it later.

So let the journey continue—there’s still so much to learn!

Using HttpWebRequest for Asynchronous Downloads

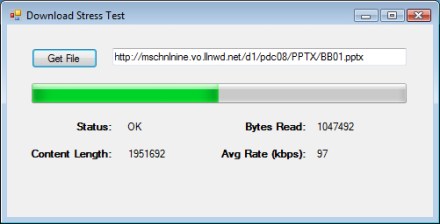

I’ve occasionally had a desire to test downloading a file via HTTP or FTP from a server—either to measure performance, or to stress test the server by kicking off a large number of simultaneous downloads. Here’s a little Win Forms client that allows you to download a single file from a server, using either HTTP or FTP. It shows download progress and displays the average transfer rate, in KB/sec. It also demonstrates how to use the HttpWebRequest and FtpWebRequest classes in System.Net to do file downloads.

As an added bonus, this app is a nice example of doing a little work on a background thread and then firing callbacks back to the GUI thread to report progress. This is done using the BeginXxx/EndXxx pattern, as well as using the Invoke method to ensure that GUI updating is done on the correct thread. I always forget the exact syntax for this, so it’s nice to have it here to refer to.

The bulk of this code comes directly from the MSDN documentation for the HttpWebRequest.BeginGetResponse method. I’ve created a little client app around it, adding some GUI elements to show progress. I’ve also extended it to support downloads using FTP.

I include code snippets in this article, but you can download the entire Visual Studio 2008 solution here.

The End Result

When we’re done, we’ll have a little Win Forms app that lets us enter an HTTP or FTP path to a file and then downloads that file. During the download, we see the progress, as well as the average transfer rate.

For the moment, the application doesn’t actually write the file locally. Instead, it just downloads the entire file, throwing away the data that it downloaded. The intent is to stress the server and measure the transfer speed—not to actually get a copy of the file.

If we were to use HTTP and specify an HTML file to download, we’d basically be doing the same thing that a web browser does—downloading a web page from the server to the client. In the example above, I download a 1.9MB PowerPoint file from the PDC conference, just so that we have something a little larger than a web page and can see some progress.

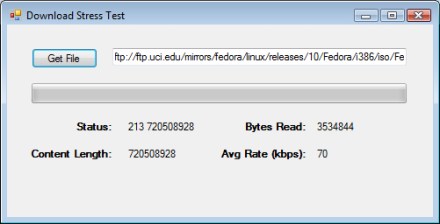

Using FTP Instead of HTTP

My little application does FTP, as well as HTTP. If you enter an FTP-based URI, rather than an HTTP-based one, we automatically switch to using FTP to download the file. Before the download can start, however, we need to ask the user for credentials to use to log into the FTP site.

Once we’ve gotten the FTP credentials, the download runs in much the same way that the HTTP-based download ran.

In this case, I’m downloading an ISO image of the first CD of a Fedora distribution. Note that the FTP response string starts with “213”, which gives file status and represents a successful response from the FTP server. The second part of the response string is the size of the file, in bytes. In the case of HTTP, the response was just “OK”.

Where Are We?

So what do we really have here? A little program that downloads a single file without really writing it anywhere. At this point, we have something that’s mildly useful for testing a server, since it tells us the transfer rate. Furthermore, we can launch a bunch of these guys in parallel and download the same file many times in parallel, to stress the server. (Ideally, the application would let us pick #-of-simultaneous-downloads and just kick them all off, but that’s an enhancement to be added later).

Diving Into the Source Code

More interesting than what this little program does is how you go about using the HttpWebRequest and FtpWebRequest classes to do the actual work.

Here’s a quick look at the C# solution:

There’s really not much here—the main form (DownloadStressTestForm), the FTP credentials form (GetCredentialsForm) and a little helper class used to pass data around between asynchronous methods.

Most of the code lives in DownloadStressTestForm.cs. Ideally, we’d split this out into the GUI pieces and the actual plumbing code that does the work of downloading the files. But this is just a quick-and-dirty project.

Push the Button

Let’s take a look at the code that fires when you click the Get File button.

```csharp
private void btnGetFile_Click(object sender, EventArgs e)
{
    try
    {
        lblDownloadComplete.Visible = false;

        WebRequest req = null;
        WebRequestState reqState = null;

        Uri fileURI = new Uri(txtURI.Text);

        if (fileURI.Scheme == Uri.UriSchemeHttp)
        {
            req = (HttpWebRequest)HttpWebRequest.Create(fileURI);
            reqState = new HttpWebRequestState(BUFFER_SIZE);
            reqState.request = req;
        }
        else if (fileURI.Scheme == Uri.UriSchemeFtp)
        {
            // Get credentials
            GetCredentialsForm frmCreds = new GetCredentialsForm();
            DialogResult result = frmCreds.ShowDialog();
            if (result == DialogResult.OK)
            {
                req = (FtpWebRequest)FtpWebRequest.Create(fileURI);
                req.Credentials = new NetworkCredential(frmCreds.Username, frmCreds.Password);
                reqState = new FtpWebRequestState(BUFFER_SIZE);

                // Set FTP-specific stuff
                ((FtpWebRequest)req).KeepAlive = false;

                // First thing we do is get file size. 2nd step, done later,
                // will be to download actual file.
                ((FtpWebRequest)req).Method = WebRequestMethods.Ftp.GetFileSize;
                reqState.FTPMethod = WebRequestMethods.Ftp.GetFileSize;

                reqState.request = req;
            }
            else
                req = null;   // abort
        }
        else
            MessageBox.Show("URL must be either http://xxx or ftp://xxxx");

        if (req != null)
        {
            reqState.fileURI = fileURI;
            reqState.respInfoCB = new ResponseInfoDelegate(SetResponseInfo);
            reqState.progCB = new ProgressDelegate(Progress);
            reqState.doneCB = new DoneDelegate(Done);
            reqState.transferStart = DateTime.Now;

            // Start the asynchronous request; returns immediately.
            req.BeginGetResponse(new AsyncCallback(RespCallback), reqState);
        }
    }
    catch (Exception ex)
    {
        MessageBox.Show(string.Format("EXC in btnGetFile_Click(): {0}", ex.Message));
    }
}
```

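The basic goal here is to create an instance of either the HttpWebRequest or FtpWebRequest class. This is done by calling the corresponding Create method and passing it the URI that the user entered. (Create is really the static WebRequest.Create method, which picks the concrete class based on the URI’s scheme; the cast just documents what we expect to get back.) Note that we use the Uri class to figure out whether the user entered an HTTP or an FTP URI. Either way, we hold the new request in a variable of the base-class type, WebRequest, which we’ll use to kick everything off.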

We also create an instance of a class used to store some state information, either HttpWebRequestState or FtpWebRequestState. These classes both derive from WebRequestState and are defined in this project, in WebRequestState.cs.

The idea of this state object is that we’ll hand it off to the asynchronous method that we use to do the actual download. It then will get passed back to the callback that fires when an asynchronous method completes. Think of it as a little suitcase of stuff that we want to carry around with us and hand off between the asynchronous methods.
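WebRequestState.cs isn’t listed in this article, so here is a hypothetical reconstruction of what the suitcase might contain, inferred only from the fields that the listings in this article read and write. The real file in the downloadable solution may differ (for example, the base class may not be abstract). The three delegate declarations are repeated from the article so that the block compiles on its own.

```csharp
using System;
using System.IO;
using System.Net;

// Delegate types, as declared in the article.
public delegate void ResponseInfoDelegate(string statusDescr, string contentLength);
public delegate void ProgressDelegate(int totalBytes, double pctComplete, double transferRate);
public delegate void DoneDelegate();

// Hypothetical reconstruction: the object passed as the `state` argument of
// each BeginXxx call and recovered from IAsyncResult.AsyncState in callbacks.
public abstract class WebRequestState
{
    public WebRequest request;          // current HTTP or FTP request
    public WebResponse response;        // response whose stream we read
    public Stream streamResponse;       // that response's stream
    public byte[] bufferRead;           // buffer reused by every BeginRead
    public Uri fileURI;                 // file being downloaded
    public string FTPMethod;            // FTP only: GetFileSize, then DownloadFile

    public long totalBytes;             // expected size (ContentLength)
    public int bytesRead;               // running total of bytes received
    public DateTime transferStart;      // used for the average transfer rate

    // Callbacks into the form, used to update the GUI.
    public ResponseInfoDelegate respInfoCB;
    public ProgressDelegate progCB;
    public DoneDelegate doneCB;

    protected WebRequestState(int bufferSize)
    {
        bufferRead = new byte[bufferSize];
    }
}

public class HttpWebRequestState : WebRequestState
{
    public HttpWebRequestState(int bufferSize) : base(bufferSize) { }
}

public class FtpWebRequestState : WebRequestState
{
    public FtpWebRequestState(int bufferSize) : base(bufferSize) { }
}
```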

Notice that if we’re doing an FTP transfer, we first pop up the credentials dialog to get the Username and Password from the user. We then store those credentials in the FtpWebRequest object.

There’s one other difference between HTTP and FTP. In the case of HTTP, we’ll fire off a single web request, with a GET command, to download the file. But for FTP, we actually first send a command to read the file size, followed by the command to actually download the file. To accomplish this, we set the Method property of the FtpWebRequest to WebRequestMethods.Ftp.GetFileSize. We don’t set this property for HttpWebRequest because it just defaults to the GET command, which is what we want.
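The two-step FTP sequence is easier to see in a synchronous sketch. This is not the article’s code (which stays asynchronous throughout), and `FtpTwoStep` and its method names are hypothetical. It shows the rule that forces the second request: once an FtpWebRequest has been submitted, its Method can no longer be changed, so each FTP command gets a fresh request. Note that newer .NET versions mark WebRequest and its subclasses obsolete, so this compiles there with warnings.

```csharp
using System;
using System.Net;

public static class FtpTwoStep
{
    // One fresh FtpWebRequest per FTP command, since a submitted request
    // can't have its Method changed.
    public static FtpWebRequest CreateRequest(Uri fileUri, ICredentials creds, string method)
    {
        var req = (FtpWebRequest)WebRequest.Create(fileUri);
        req.Credentials = creds;
        req.Method = method;
        req.UseBinary = true;
        return req;
    }

    // Step 1: the SIZE command. The file size comes back in ContentLength.
    public static long GetFileSize(Uri fileUri, ICredentials creds)
    {
        FtpWebRequest req = CreateRequest(fileUri, creds, WebRequestMethods.Ftp.GetFileSize);
        using (var resp = (FtpWebResponse)req.GetResponse())
        {
            return resp.ContentLength;
        }
    }

    // Step 2: the RETR command, on a brand-new request. The caller reads the
    // response stream and disposes the response when done.
    public static FtpWebResponse StartDownload(Uri fileUri, ICredentials creds)
    {
        FtpWebRequest req = CreateRequest(fileUri, creds, WebRequestMethods.Ftp.DownloadFile);
        return (FtpWebResponse)req.GetResponse();
    }
}
```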

Towards the end of this function, you’ll see that I’m loading up the suitcase—setting the various properties of the WebRequestState object. Along with the URI, we set up some delegates to point back to three callbacks in the DownloadStressTestForm class—SetResponseInfo, Progress, and Done. These are the callbacks that actually update our user interface—when things start, during the file transfer, and when the file has finished downloading.

Finally, we call the BeginGetResponse method to actually launch the download. Here, we specify a response callback—the method that will get called, not when the download has completed, but just when we get the actual HTTP response, or when the FTP command completes. In the case of HTTP, we first get the response packet and then start reading the actual file using a stream that we get from the response.

What’s important here is that we push the work into the background, on another thread, as soon as possible. We don’t do much work in the button click event handler before calling BeginGetResponse. And this method is asynchronous—so we return control to the GUI immediately. From this point on, we will only update the GUI in response to a callback.

Callbacks to Update the GUI

I mentioned the three callbacks above that we use to update the user interface—SetResponseInfo, Progress, and Done. Here’s the declaration of the delegate types:

```csharp
public delegate void ResponseInfoDelegate(string statusDescr, string contentLength);
public delegate void ProgressDelegate(int totalBytes, double pctComplete, double transferRate);
public delegate void DoneDelegate();
```

And here are the bodies of each of these three callbacks, as implemented in DownloadStressTestForm.

```csharp
/// <summary>
/// Response info callback, called after we get HTTP response header.
/// Used to set info in GUI about max download size.
/// </summary>
private void SetResponseInfo(string statusDescr, string contentLength)
{
    if (this.InvokeRequired)
    {
        ResponseInfoDelegate del = new ResponseInfoDelegate(SetResponseInfo);
        this.Invoke(del, new object[] { statusDescr, contentLength });
    }
    else
    {
        lblStatusDescr.Text = statusDescr;
        lblContentLength.Text = contentLength;
    }
}

/// <summary>
/// Progress callback, called when we've read another packet of data.
/// Used to set info in GUI on % complete & transfer rate.
/// </summary>
private void Progress(int totalBytes, double pctComplete, double transferRate)
{
    if (this.InvokeRequired)
    {
        ProgressDelegate del = new ProgressDelegate(Progress);
        this.Invoke(del, new object[] { totalBytes, pctComplete, transferRate });
    }
    else
    {
        lblBytesRead.Text = totalBytes.ToString();

        // ProgressBar.Value throws if set outside Minimum..Maximum, which can
        // happen if the server reports no length (ContentLength == -1).
        int pct = (int)pctComplete;
        progressBar1.Value = Math.Max(progressBar1.Minimum, Math.Min(progressBar1.Maximum, pct));

        lblRate.Text = transferRate.ToString("f0");
    }
}

/// <summary>
/// GUI-updating callback called when download has completed.
/// </summary>
private void Done()
{
    if (this.InvokeRequired)
    {
        DoneDelegate del = new DoneDelegate(Done);
        this.Invoke(del, new object[] { });
    }
    else
    {
        progressBar1.Value = 0;
        lblDownloadComplete.Visible = true;
    }
}
```

This is pretty simple stuff. To start with, notice the common pattern in each method, where we check InvokeRequired. Remember the primary rule about updating controls in a user interface and asynchronous programming: the controls must be updated by the same thread that created them. InvokeRequired tells us if we’re on the right thread or not. If not, we use the Invoke method to recursively call ourselves, but on the thread that created the control (the one that owns the window handle).

Make note of this InvokeRequired / Invoke pattern. You’ll use it whenever you’re doing background work on another thread and then you want to return some information back to the GUI.
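Since the same check-and-Invoke lines recur in every callback, the pattern can be factored into a helper. This is a sketch, not part of WinForms: `InvokeIfRequired` is a hypothetical extension method, and it assumes C# 7 or later. It targets `ISynchronizeInvoke`, the interface that `Control` implements to provide `InvokeRequired` and `Invoke`, which also lets it be exercised below with a trivial stand-in (`FakeSyncTarget`, likewise hypothetical) instead of a real form.

```csharp
using System;
using System.ComponentModel;

public static class SyncInvokeExtensions
{
    // Runs `action` on the target's thread: directly if we're already on it,
    // otherwise marshaled through Invoke (which blocks until it has run).
    public static void InvokeIfRequired(this ISynchronizeInvoke target, Action action)
    {
        if (target.InvokeRequired)
            target.Invoke(action, null);
        else
            action();
    }
}

// Stand-in for a Control, used only to exercise the helper. It reports
// whatever InvokeRequired value it's given and counts Invoke calls.
public class FakeSyncTarget : ISynchronizeInvoke
{
    public bool InvokeRequired { get; set; }
    public int InvokeCalls;

    public object Invoke(Delegate method, object[] args)
    {
        InvokeCalls++;
        return method.DynamicInvoke(args);
    }

    public IAsyncResult BeginInvoke(Delegate method, object[] args) => throw new NotSupportedException();
    public object EndInvoke(IAsyncResult result) => throw new NotSupportedException();
}
```

Inside the form, SetResponseInfo would then shrink to `this.InvokeIfRequired(() => { lblStatusDescr.Text = statusDescr; lblContentLength.Text = contentLength; });`.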

The work that these callbacks do is very simple. SetResponseInfo is called when we first get the response packet, as we start downloading the file. We get an indication of the file size, which we write to the GUI. Progress is called for each packet that we download. We update the labels that indicate # bytes received and average transfer rate, as well as the main progress bar. Done is called when we’re all done transferring the file.

The Response Callback

Let’s go back to where we called the WebRequest.BeginGetResponse method. When we called this method, we specified our RespCallback as the method to be invoked when the response packet was received. Here’s the code:

```csharp
/// <summary>
/// Main response callback, invoked once we have first Response packet from
/// server. This is where we initiate the actual file transfer, reading from
/// a stream.
/// </summary>
private static void RespCallback(IAsyncResult asyncResult)
{
    try
    {
        // Will be either HttpWebRequestState or FtpWebRequestState
        WebRequestState reqState = ((WebRequestState)(asyncResult.AsyncState));
        WebRequest req = reqState.request;
        string statusDescr = "";
        string contentLength = "";

        // HTTP
        if (reqState.fileURI.Scheme == Uri.UriSchemeHttp)
        {
            HttpWebResponse resp = ((HttpWebResponse)(req.EndGetResponse(asyncResult)));
            reqState.response = resp;
            statusDescr = resp.StatusDescription;
            reqState.totalBytes = reqState.response.ContentLength;
            contentLength = reqState.response.ContentLength.ToString();   // # bytes
        }

        // FTP part 1 - response to GetFileSize command
        else if ((reqState.fileURI.Scheme == Uri.UriSchemeFtp) &&
                 (reqState.FTPMethod == WebRequestMethods.Ftp.GetFileSize))
        {
            // First FTP command was GetFileSize, so this 1st response is the size of
            // the file.
            FtpWebResponse resp = ((FtpWebResponse)(req.EndGetResponse(asyncResult)));
            statusDescr = resp.StatusDescription;
            reqState.totalBytes = resp.ContentLength;
            contentLength = resp.ContentLength.ToString();   // # bytes

            // Done with this response; close it so its connection is released.
            resp.Close();
        }

        // FTP part 2 - response to DownloadFile command
        else if ((reqState.fileURI.Scheme == Uri.UriSchemeFtp) &&
                 (reqState.FTPMethod == WebRequestMethods.Ftp.DownloadFile))
        {
            FtpWebResponse resp = ((FtpWebResponse)(req.EndGetResponse(asyncResult)));
            reqState.response = resp;
        }
        else
            throw new ApplicationException("Unexpected URI");

        // Get this info back to the GUI -- max # bytes, so we can do progress bar
        if (statusDescr != "")
            reqState.respInfoCB(statusDescr, contentLength);

        // FTP part 1 done, need to kick off 2nd FTP request to get the actual file
        if ((reqState.fileURI.Scheme == Uri.UriSchemeFtp) &&
            (reqState.FTPMethod == WebRequestMethods.Ftp.GetFileSize))
        {
            // Note: Need to create a new FtpWebRequest, because we're not allowed to change .Method after
            // we've already submitted the earlier request. I.e. FtpWebRequest not recyclable.
            // So create a new request, moving everything we need over to it.
            FtpWebRequest req2 = (FtpWebRequest)FtpWebRequest.Create(reqState.fileURI);
            req2.Credentials = req.Credentials;
            req2.UseBinary = true;
            req2.KeepAlive = true;
            req2.Method = WebRequestMethods.Ftp.DownloadFile;

            reqState.request = req2;
            reqState.FTPMethod = WebRequestMethods.Ftp.DownloadFile;

            // Start the asynchronous request, which will call back into this same method
            req2.BeginGetResponse(new AsyncCallback(RespCallback), reqState);
        }
        else   // HTTP or FTP part 2 -- we're ready for the actual file download
        {
            // Set up a stream, for reading response data into it
            Stream responseStream = reqState.response.GetResponseStream();
            reqState.streamResponse = responseStream;

            // Begin reading contents of the response data
            responseStream.BeginRead(reqState.bufferRead, 0, BUFFER_SIZE, new AsyncCallback(ReadCallback), reqState);
        }

        return;
    }
    catch (Exception ex)
    {
        MessageBox.Show(string.Format("EXC in RespCallback(): {0}", ex.Message));
    }
}
```

The first thing that we do in this method is to open our suitcase–our WebRequestState object, which comes back in the AsyncState property of the IAsyncResult.

The other main thing that we do in this method is to get the actual WebResponse object. This contains the information that we actually got back from the server. We do this by calling the EndGetResponse method.

Notice the standard Begin/End pattern for asynchronous programming here. We could have done all of this synchronously, by calling GetResponse on the original HttpWebRequest (or FtpWebRequest) object. GetResponse would have returned an HttpWebResponse (or FtpWebResponse) object. Instead, we call BeginGetResponse to launch the asynchronous method and then call EndGetResponse in the callback to get the actual result—the WebResponse object.

At this point, the first thing that we want from the response packet is an indication of the length of the file that we’re downloading. We get that from the ContentLength property.

It’s also at this point that we call the ResponseInfo delegate, passing it the status string and content length, to update the GUI. (Using the respInfoCB field in the WebRequestState object).

Let’s ignore FTP for the moment and look at the final main thing that we do in this method—get a stream object and kick off a read of the first packet. We get the stream from that WebResponse object and then go asynchronous again by calling the BeginRead method. Are you seeing a pattern yet? Again, if we wanted to do everything synchronously, we could just set up a loop here and call the stream’s Read method to read each buffer of data. But instead, we fire up an asynchronous read, specifying our method that should be called when we get the first packet/buffer of data—ReadCallback.
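For comparison, the synchronous loop mentioned above looks roughly like this. It is a sketch, assuming the caller already has the response stream (for example from `GetResponse().GetResponseStream()`); `StreamDrain.ReadAll` is a hypothetical helper that, like the article’s app, counts the bytes and throws the data away.

```csharp
using System;
using System.IO;

public static class StreamDrain
{
    // Reads one buffer at a time until Read returns 0 (end of stream),
    // discarding the data and returning the total number of bytes read.
    public static long ReadAll(Stream stream, int bufferSize, Action<long> onProgress = null)
    {
        var buffer = new byte[bufferSize];
        long total = 0;
        int n;
        while ((n = stream.Read(buffer, 0, buffer.Length)) > 0)
        {
            total += n;
            onProgress?.Invoke(total);   // plays the role of the Progress callback
        }
        return total;
    }
}
```

The catch is that every `Read` call blocks the calling thread until data arrives. Run this from the button click handler and the form freezes for the whole download, which is exactly what the BeginRead/EndRead chain avoids.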

FTP Download, Step 2

Let’s go back to how we’re doing FTP. Remember that we set the FtpWebRequest.Method property to GetFileSize. In RespCallback, if we see that we just did that first command, we send the file size back to the GUI. Then we’re ready to launch the 2nd FTP command, which is DownloadFile. We do this by creating a 2nd FtpWebRequest and calling its BeginGetResponse method. Once again, when the asynchronous method completes, we get control back in RespCallback. We don’t risk recursing indefinitely because we store an indication of which command we’re doing in our suitcase, in WebRequestState.FTPMethod.

Gettin’ the Data

Finally, let’s take a look at the code where we actually get a chunk of data from the server. First, a quick note about buffer size. Notice that when I called BeginRead, I specified a buffer size using the BUFFER_SIZE constant. For the record, I’m using a value of 1448 here, which is the data payload of a typical TCP segment (the size of an Ethernet packet, less its IP and TCP header info). We could really use any value here that we liked—it just seemed reasonable to ask for the data a packet at a time.
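The 1448 figure works out if you assume a standard 1,500-byte Ethernet MTU, IPv4 and TCP headers without extra options, and the TCP timestamp option enabled. Those are assumptions about a typical link; other networks and option sets give different numbers. `PacketMath` is a hypothetical class that just spells out the subtraction.

```csharp
public static class PacketMath
{
    // Assumed sizes, in bytes.
    public const int EthernetMtu = 1500;       // largest IP packet on standard Ethernet
    public const int Ipv4Header = 20;          // IPv4 header with no options
    public const int TcpHeader = 20;           // base TCP header
    public const int TcpTimestampOption = 12;  // 10-byte timestamp option, padded to 12

    // Data left over in one full-sized TCP segment.
    public const int BUFFER_SIZE = EthernetMtu - Ipv4Header - TcpHeader - TcpTimestampOption;
}
```

Reads don’t map one-to-one onto packets, though: EndRead can return anything from 1 byte up to the requested count, so the buffer size only caps how much each callback can receive.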

Here’s the code for our read callback, which first fires when the first packet is received, after calling BeginRead.

```csharp
/// <summary>
/// Main callback invoked in response to the Stream.BeginRead method, when we have some data.
/// </summary>
private static void ReadCallback(IAsyncResult asyncResult)
{
    try
    {
        // Will be either HttpWebRequestState or FtpWebRequestState
        WebRequestState reqState = ((WebRequestState)(asyncResult.AsyncState));
        Stream responseStream = reqState.streamResponse;

        // Get results of read operation
        int bytesRead = responseStream.EndRead(asyncResult);

        // Got some data, need to read more
        if (bytesRead > 0)
        {
            // Report some progress, including total # bytes read, % complete, and transfer rate
            reqState.bytesRead += bytesRead;

            // totalBytes is -1 if the server didn't report a length; don't divide by it.
            double pctComplete = (reqState.totalBytes > 0)
                ? ((double)reqState.bytesRead / (double)reqState.totalBytes) * 100.0
                : 0.0;

            // Note: bytesRead/totalMS is in bytes/ms. Convert to KB/sec.
            TimeSpan totalTime = DateTime.Now - reqState.transferStart;
            double kbPerSec = (reqState.bytesRead * 1000.0) / (totalTime.TotalMilliseconds * 1024.0);

            reqState.progCB(reqState.bytesRead, pctComplete, kbPerSec);

            // Kick off another read
            responseStream.BeginRead(reqState.bufferRead, 0, BUFFER_SIZE, new AsyncCallback(ReadCallback), reqState);
            return;
        }

        // EndRead returned 0, so no more data to be read
        else
        {
            responseStream.Close();
            reqState.response.Close();
            reqState.doneCB();
        }
    }
    catch (Exception ex)
    {
        MessageBox.Show(string.Format("EXC in ReadCallback(): {0}", ex.Message));
    }
}
```

As I’m so fond of saying, this is pretty simple stuff. Once again, we use a recursion-like pattern, because we’re asynchronously reading a packet at a time: each time ReadCallback runs, it kicks off the read that will invoke ReadCallback again. We get the stream object out of our suitcase and then call EndRead to find out how many bytes were read. That count is also what tells us when we’re done reading the data: once there’s nothing left, EndRead returns 0.

If we’re all done reading the data, we close down our stream and WebResponse object, before calling our final GUI callback to tell the GUI that we’re done.

But if we did read some data, we first call our progress callback to tell the GUI that we got another packet, and then we fire off another BeginRead (which will, of course, land us back in the ReadCallback method when the next packet arrives).

You can see that we’re passing back some basic info to the GUI—total # bytes read, the % complete, and the calculated average transfer rate, in KB per second.
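Here is a minimal sketch of that progress arithmetic as two standalone functions, so it can be checked in isolation (TransferMath is a hypothetical helper, not part of the sample). It uses double throughout and returns 0 rather than a negative or infinite value when the total size is unknown or no time has elapsed yet.

```csharp
using System;

// Hypothetical helper (not from the article): the progress math from ReadCallback.
public static class TransferMath
{
    // Percent complete; reports 0 if the total is unknown or zero (e.g. a ContentLength of -1).
    public static double PercentComplete(long bytesRead, long totalBytes)
    {
        return totalBytes > 0 ? (double)bytesRead / totalBytes * 100.0 : 0.0;
    }

    // Average rate in KB/sec: (bytes / ms) * (1000 ms / sec) / (1024 bytes / KB).
    public static double KbPerSec(long bytesRead, TimeSpan elapsed)
    {
        double ms = elapsed.TotalMilliseconds;
        return ms > 0 ? bytesRead * 1000.0 / (ms * 1024.0) : 0.0;
    }
}
```

For example, 1,048,576 bytes read in 2 seconds gives 1,048,576 × 1000 / (2000 × 1024) = 512 KB/sec.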

If we actually cared about the data itself, we could find it in our suitcase—in WebRequestState.bufferRead. This is just a byte array that we specified when we called BeginRead. In the case of this application, we don’t care about the actual data, so we don’t do anything with it.
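If you did want to keep the data, ReadCallback could write each chunk to an output stream (say, a FileStream stored in the suitcase as an extra, hypothetical field) right before kicking off the next BeginRead. A minimal sketch of that write, with ChunkWriter as a hypothetical helper:

```csharp
using System.IO;

// Hypothetical helper (not from the article): persist one chunk delivered by EndRead.
public static class ChunkWriter
{
    // Writes only the bytes the last read actually filled. Using buffer.Length instead
    // would append stale bytes whenever a read comes back short, as the final one usually does.
    public static void WriteChunk(Stream output, byte[] buffer, int bytesRead)
    {
        output.Write(buffer, 0, bytesRead);
    }
}
```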

Opening the Suitcase

We’ve looked at basically all the code, except for the implementation of the WebRequestState class that we’ve been using as our “suitcase”. Here’s the base class:

/// <summary>
/// Base class for state object that gets passed around amongst async methods
/// when doing async web request/response for data transfer. We store basic
/// things that track current state of a download, including # bytes transferred,
/// as well as some async callbacks that will get invoked at various points.
/// </summary>
public abstract class WebRequestState
{
    public int bytesRead;            // # bytes read during current transfer
    public long totalBytes;          // Total bytes to read
    public double progIncrement;     // delta % for each buffer read
    public Stream streamResponse;    // Stream to read from
    public byte[] bufferRead;        // Buffer to read data into
    public Uri fileURI;              // Uri of object being downloaded
    public string FTPMethod;         // What was the previous FTP command? (e.g. get file size vs. download)
    public DateTime transferStart;   // Used for tracking xfr rate

    // Callbacks for response packet info & progress
    public ResponseInfoDelegate respInfoCB;
    public ProgressDelegate progCB;
    public DoneDelegate doneCB;

    // Derived classes override these with strongly typed versions;
    // the base versions simply store and return the value.
    private WebRequest _request;
    public virtual WebRequest request
    {
        get { return _request; }
        set { _request = value; }
    }

    private WebResponse _response;
    public virtual WebResponse response
    {
        get { return _response; }
        set { _response = value; }
    }

    public WebRequestState(int buffSize)
    {
        bytesRead = 0;
        bufferRead = new byte[buffSize];
        streamResponse = null;
    }
}

This is just all of the stuff that we wanted to pass around between our asynchronous methods. You’ll see our three delegates, for calling back to the GUI. And you’ll also see where we store our WebRequest and WebResponse objects.
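To see the suitcase idea in isolation, here is a minimal, self-contained sketch that reads a MemoryStream with the same BeginRead/EndRead pattern. ReadState and SuitcaseDemo are hypothetical stand-ins for WebRequestState and the article's callbacks; the point is that everything the callback needs travels through the state argument of BeginRead and comes back out of IAsyncResult.AsyncState.

```csharp
using System;
using System.IO;
using System.Threading;

// Hypothetical "suitcase": everything the callback needs, in one object.
public class ReadState
{
    public Stream Source;
    public byte[] Buffer = new byte[1448];
    public long BytesRead;
    public ManualResetEventSlim Done = new ManualResetEventSlim(false);
}

public static class SuitcaseDemo
{
    // Start (or continue) reading; the state object rides along as BeginRead's last argument.
    public static void Start(ReadState state)
    {
        state.Source.BeginRead(state.Buffer, 0, state.Buffer.Length, ReadCallback, state);
    }

    private static void ReadCallback(IAsyncResult ar)
    {
        // Unpack the suitcase: no static fields needed to find the stream or counters.
        var state = (ReadState)ar.AsyncState;
        int n = state.Source.EndRead(ar);
        if (n > 0)
        {
            state.BytesRead += n;
            Start(state);      // kick off the next read with the same suitcase
        }
        else
        {
            state.Done.Set();  // EndRead returned 0: end of stream
        }
    }
}
```

The ManualResetEventSlim plays the role of the article's doneCB: it is how the caller learns that the last read returned 0.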

The final thing to look at is the code, also in WebRequestState.cs, for the two derived classes—HttpWebRequestState and FtpWebRequestState.

/// <summary>
/// State object for HTTP transfers
/// </summary>
public class HttpWebRequestState : WebRequestState
{
    private HttpWebRequest _request;
    public override WebRequest request
    {
        get { return _request; }
        set { _request = (HttpWebRequest)value; }
    }

    private HttpWebResponse _response;
    public override WebResponse response
    {
        get { return _response; }
        set { _response = (HttpWebResponse)value; }
    }

    public HttpWebRequestState(int buffSize) : base(buffSize) { }
}

/// <summary>
/// State object for FTP transfers
/// </summary>
public class FtpWebRequestState : WebRequestState
{
    private FtpWebRequest _request;
    public override WebRequest request
    {
        get { return _request; }
        set { _request = (FtpWebRequest)value; }
    }

    private FtpWebResponse _response;
    public override WebResponse response
    {
        get { return _response; }
        set { _response = (FtpWebResponse)value; }
    }

    public FtpWebRequestState(int buffSize) : base(buffSize) { }
}

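The whole point of these classes is to override the request and response properties of the base class so that each is backed by a strongly typed field, e.g. HttpWebRequest and HttpWebResponse. The property types are still WebRequest and WebResponse, but the cast in each setter means that assigning the wrong kind of request or response throws an InvalidCastException instead of going unnoticed.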
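An alternative worth knowing about (not the design used in this article) is to let generics do the strong typing. In this sketch, TransferState and TypedTransferState are hypothetical names; the non-generic base keeps the fields that ReadCallback needs, so the callback can still cast AsyncState to a single type.

```csharp
using System.IO;
using System.Net;

// Non-generic part: the fields a read callback needs, reachable through one base type.
public abstract class TransferState
{
    public long bytesRead;
    public long totalBytes;
    public Stream streamResponse;
    public byte[] bufferRead;

    protected TransferState(int buffSize)
    {
        bufferRead = new byte[buffSize];
    }
}

// Generic part: strongly typed request/response with no per-protocol override boilerplate.
public class TypedTransferState<TRequest, TResponse> : TransferState
    where TRequest : WebRequest
    where TResponse : WebResponse
{
    public TRequest Request { get; set; }
    public TResponse Response { get; set; }

    public TypedTransferState(int buffSize) : base(buffSize) { }
}

// Usage: var http = new TypedTransferState<HttpWebRequest, HttpWebResponse>(1448);
```

The trade-off is that code holding only a TransferState must know the type arguments (or cast) before it can reach Request and Response, whereas the article's version exposes them on the base class.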

Wrapping Up

That’s about it—that’s really all that’s required to implement a very simple HTTP or FTP client application, using the HttpWebRequest and FtpWebRequest classes in System.Net.

This is still a pretty crude application and there are a number of obvious next steps that we could take if we wanted to improve it:

- Allow user to pick # downloads and kick off simultaneous downloads, each with their own progress bar

- Prevent clicking the Get File button if a download is already in progress. (Try it: you do actually get a second download, but the progress bar goes wacky trying to report on both at the same time.)

- Add a timer so that we can recover if a transfer times out

- Allow the user to actually store the data to a local file

- Log the results somewhere, especially if we launched multiple downloads
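For the second item, the simplest fix is to disable the Get File button when a download starts and re-enable it in the done callback (marshalled back to the GUI thread). If you would rather keep a flag that is also safe to clear from a worker thread, a minimal sketch using Interlocked.CompareExchange might look like this (DownloadGuard is a hypothetical class):

```csharp
using System.Threading;

// Hypothetical guard for "only one download at a time".
public class DownloadGuard
{
    private int _busy; // 0 = idle, 1 = download in progress

    // Atomically flips idle -> busy; returns false if a download is already running.
    public bool TryBegin()
    {
        return Interlocked.CompareExchange(ref _busy, 1, 0) == 0;
    }

    // Marks the guard idle again; safe to call from any thread.
    public void End()
    {
        Interlocked.Exchange(ref _busy, 0);
    }
}
```

Call TryBegin in the button's Click handler and simply return if it is false; call End from both the done callback and any error path, or the guard will stay stuck.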

Why You Need a Backup Plan

Everyone has a backup plan. Whether you have one that you follow carefully or whether you’ve never even thought about backups, you have a plan in place. Whatever you are doing or not doing constitutes your backup plan.

I would propose that the three most common backup plans that people follow are:

- Remain completely ignorant of the need to back up files

- Vaguely know that you should back up your PC, but not really understand what this means

- Fully realize the dangers of going without backups and do occasional manual backups, but procrastinate coming up with a plan to do it regularly

Plan #1 is most commonly practiced by less technical folk—i.e. your parents, your brother-in-law, or your local pizza place. These people can hardly be faulted. The computer has always remembered everything that they’ve told it, so how could it actually lose something? (Your pizza guy was unpleasantly reminded of this when his browser informed his wife that the “Tomato Sauce Babes” site was one of his favorite sites). When these people lose something, they become angry and will likely never trust computers again.

Plan #2 is followed by people who used to follow plan #1, but graduated to plan #2 after accidentally deleting an important file and then blindly trying various things they didn’t understand, including emptying their Recycle Bin. They now understand that bad things can happen. (You can also qualify for advancement from plan #1 to #2 if you’ve ever spent hours editing a document, closed it without first saving, and then clicked No when asked “Do you want to save changes to your document?”) Although this group understands the dangers of losing stuff, they don’t really know what they can do to protect their data.

Plan #3 is what most of us techies have used for many years. We do occasional full backups of our system and we may even configure a backup tool to do regular automated backups to a network drive. But we quickly become complacent and forget to check whether the backups are still getting done. Or we forget to add newly created directories to our backup configuration. How many of us are confident that regular backups are happening, right up until the day that we need to restore a file and discover nothing but a one-line .log file in our backup directory that simply says “directory not found”?

Shame on us. If we’ve been working in software development or IT for any length of time, bad things definitely have happened to us. So we should know better.

Here’s a little test. When you’re working in Microsoft Word, how often do you press Ctrl-S? Only after you’ve been slaving away for two hours, writing the killer memo? Or do you save after every paragraph (or sentence)? Most of us have suffered one of those “holy f**k” moments at some point in our career. And now we do know better.

How to Lose Your Data

There are lots of different ways to lose data. Most of us know to “save early and often” when working on a document because we know that we can’t back up what’s not even on the disk. But when it comes to actual disk crashes (or worse), we become complacent. This is certainly true for me. I had a hard disk crash in 1997 and lost some things that were important to me. For the next few months, I did regular backups like some sort of data protection zealot. But I haven’t had a true crash since then—and my backup habits have gradually deteriorated, as I slowly regained my confidence in the reliability of my hard drives.

After all, I’ve read that typical hard drives have an MTBF (Mean Time Between Failures) of 1,000,000 hours. That works out to 114 years, so I should be okay, right?
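The 114-year figure is just the division below. MTBF describes a large population of drives, not the lifetime of any one drive; assuming a constant failure rate, it corresponds to an annualized failure rate (AFR) of a little under 1%, or roughly one drive in a hundred failing each year:

```latex
\frac{1{,}000{,}000\ \text{h}}{24 \times 365\ \text{h/yr}} = \frac{1{,}000{,}000}{8{,}760} \approx 114\ \text{yr},
\qquad
\text{AFR} \approx 1 - e^{-8{,}760 / 1{,}000{,}000} \approx 0.87\%
```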